As AI systems grow more complex and more integral to core operations, explainable AI (XAI) will shift from a technical consideration to a critical business imperative. The "black box" nature of many AI models presents a serious challenge: even their creators cannot always retrace the logic behind a specific outcome.

For businesses, particularly in high-stakes, regulated industries like healthcare or finance, this opacity introduces significant risk. Explainability is foundational for ensuring that models operate free from hidden bias and unwarranted assumptions. It supports the model monitoring and accountability organizations need to validate AI decision-making processes, uphold ethical standards, and foster the responsible use of AI.

What is Explainable AI?

Explainable AI (XAI) comprises processes and tools that enable human users to understand and trust the output of an AI model. Exposing how a model works helps users verify that the system's recommendations, predictions, and decisions are high-quality, fair, and accurate.

This fosters crucial stakeholder confidence, empowers engineers to build more reliable models, and ensures compliance with growing regulatory expectations for fairness, accountability, and transparency.

Why Does Explainable AI (XAI) Matter in 2026?

In 2026, as AI continues to penetrate workflows across sectors, the principles and methods of XAI offer a remedy for opaque decision-making, hidden bias, and compliance issues.

Business leaders can expect explainable AI benefits such as higher productivity across technical and non-technical teams, reduced regulatory risk, better customer experience, and a stronger brand reputation.

Looking to create an accountable, transparent, and explainable AI system?

Rely on Elinext’s machine learning development services built upon insights from 75+ relevant IT projects.

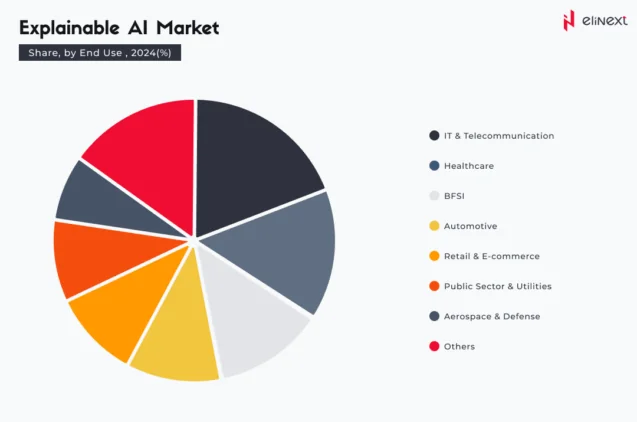

Real-World Applications of Explainable AI (XAI) Across Industries

In sectors where lives or critical operations are on the line, there is no room for black-box solutions that lack transparency and auditability. Explore the following explainable AI examples to see how industries operating under strict rules leverage trustworthy AI to solve complex, high-stakes problems.

Explainable AI for IT Services

Many of IBM's offerings, including IBM Watson OpenScale, Watson Studio Integration, and the AI Explainability 360 Toolkit, support multiple algorithms and explanation methods, enabling the creation of fair, responsible, and explainable AI solutions.

Explainable AI for Retail & eCommerce

Leading retail and streaming platforms like Amazon and Netflix use explainable AI to demystify their recommendation engines. By clarifying why products or content are suggested, these brands enhance customer trust, enable strategic validation, and ensure their personalization models align with ethical standards.

Explainable AI for Telecommunications

Prominent among explainable AI examples is T-Mobile, which uses AI chatbots to proactively retain customers. The AI identifies early signs of churn (decreased data or app usage, shifts in payment timing, etc.) and intervenes with explainable, personalized offers, for instance: "Save $15/month. We've noticed you're using less data and have a more suitable plan that can lower your bill."

Explainable AI Solutions for Healthcare

At MSKCC, IBM's Watson Health helps oncologists with cancer treatment decisions by providing evidence-based, personalized treatment options. Meanwhile, at Moorfields Eye Hospital, Google DeepMind helps ophthalmologists make referral decisions for over 50 eye diseases while showing how it reaches its conclusions.

Explainable AI Solutions for BFSI

Real-life explainable AI examples also come from the BFSI sector, where prominent fintech players like ZestFinance use AI to bring fairness to lending. In cooperation with Microsoft, ZestFinance developed ZAML, a comprehensive suite of tools for building, documenting, and monitoring high-performing, accurate, and explainable credit risk ML models.

Explainable AI Solutions for the Public Sector

Immigration, Refugees and Citizenship Canada (IRCC) piloted an explainable AI-based triage system to identify routine applications for streamlined processing. The AI system not only helped reduce processing time but also provided clear, transparent reasons for its assessments, ensuring IRCC officers remained the central decision-makers in the immigration process.

Explainable AI Solutions for Automotive

Tesla applies XAI principles in its "Full Self-Driving" software. The system shows drivers, in real time, how the vehicle perceives its environment, including pedestrians, vehicles, lane markings, and traffic lights. This visualization conveys the AI's perception and intended actions, building essential trust and understanding for the driver behind the wheel.

Explainable AI Solutions for Aerospace

In aerospace, Airbus is a notable real-world example. Known for its involvement in responsible AI initiatives such as the TUPLES project and the Confiance.ai program, the company is a strong proponent of trustworthy AI. Airbus states its commitment to applying ethical principles across all technical areas related to AI, e.g., anomaly detection, autonomous flight, and more.

Explainable AI: The Business Benefits You Need to Know

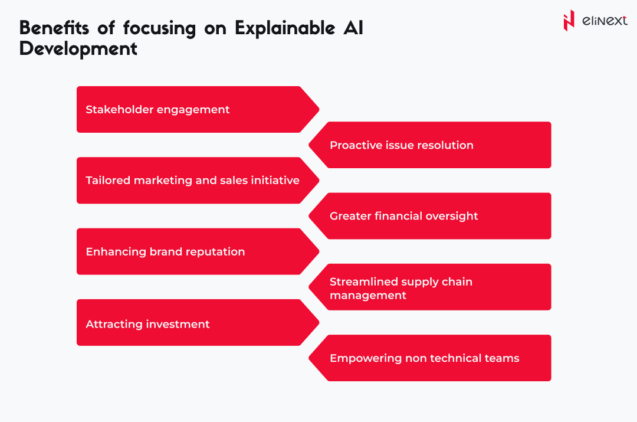

Stakeholder Engagement

For many companies, the inherent opacity of AI models creates a significant trust deficit that hinders deployment in production. Transparent, interpretable models build trust in results, remove barriers to AI adoption and scaling, and contribute to more resilient, long-term partnerships.

Proactive Issue Resolution

Verifiable AI processes let organizations identify and mitigate potential failures, biases, or performance drifts before they escalate, safeguarding critical operations and brand reputation. This foresight is among the critical benefits of explainable AI.

Tailored Marketing & Sales Initiatives

By clarifying the “why” behind AI-generated content, marketers can reduce bias in advertising models (e.g., stereotyping, objectification, or diminishment), moving beyond mere segmentation to hyper-personalized engagements that boost conversions and loyalty across different channels.

Greater Financial Oversight

XAI enables companies in regulated industries to give clients a clear rationale for automated system decisions (e.g., a flagged transaction or a declined loan). This transparency improves compliance efficiency and helps companies exercise adequate financial oversight.
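To make the idea of a "clear rationale" more concrete, here is a minimal sketch of reason codes for a declined loan, assuming a simple linear scoring model. The feature names, weights, reference applicant, threshold, and reason texts are all hypothetical and chosen only for illustration. Because each feature's contribution to a linear score is its weight times the difference from a reference value, the features that pulled the score down the most can be reported to the client as plain-language reasons.

```python
# Minimal sketch of "reason codes" for an automated loan decision.
# Assumption: a hypothetical linear scoring model; the feature names,
# weights, reference applicant, and threshold are illustrative only.
WEIGHTS = {"debt_to_income": -4.0, "missed_payments": -1.5, "years_employed": 0.3}
REFERENCE = {"debt_to_income": 0.30, "missed_payments": 0.0, "years_employed": 5.0}
INTERCEPT = 1.0
THRESHOLD = 0.0  # scores at or above this value are approved

# Hypothetical plain-language text shown to the client for each feature.
REASON_TEXT = {
    "debt_to_income": "Debt-to-income ratio is high",
    "missed_payments": "Recent missed payments",
    "years_employed": "Short employment history",
}


def score(applicant: dict) -> float:
    """Linear score: intercept plus the weighted sum of the features."""
    return INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())


def explain(applicant: dict, top_n: int = 2):
    """Return the decision, the raw score, and the top negative reasons.

    Each contribution is weight * (value - reference value), so the most
    negative contributions are the features that pushed this applicant's
    score furthest below the reference applicant's score.
    """
    s = score(applicant)
    decision = "approved" if s >= THRESHOLD else "declined"
    contributions = {f: w * (applicant[f] - REFERENCE[f]) for f, w in WEIGHTS.items()}
    # Keep only features that hurt the score, most harmful first.
    negative = sorted((f for f, c in contributions.items() if c < 0), key=contributions.get)
    reasons = [REASON_TEXT[f] for f in negative[:top_n]]
    return decision, s, reasons


if __name__ == "__main__":
    applicant = {"debt_to_income": 0.55, "missed_payments": 2, "years_employed": 1}
    decision, s, reasons = explain(applicant)
    print(decision, round(s, 2), reasons)
```

For the sample applicant, the largest negative contributions come from missed payments and a short employment history, so those become the stated reasons for the decline. This additive breakdown only works directly for linear models; non-linear models need dedicated attribution methods (for example, SHAP-style feature attributions) to produce comparable explanations.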

Enhancing Brand Reputation

AI’s black-box reasoning can erode brand trust and create reputational risk. XAI directly addresses this by making AI decisions traceable and auditable. This builds crucial stakeholder confidence, demonstrates a commitment to ethical use of AI, and transforms a potential liability into a key asset for a resilient brand.

Attracting Investment

Venture capital and private equity firms now heavily scrutinize AI governance. By adopting XAI, you demonstrate control, ethical usage, and long-term viability. This tangible de-risking is among the clear benefits of explainable AI and a powerful differentiator that can support higher valuations and faster investor buy-in.

Empowering Non-Technical Teams

Many leaders value explainable AI for demystifying complex models and giving non-technical users clear explanations of the reasoning these models follow. This enables marketing, sales, and operations teams to move from blind reliance on AI recommendations to data-informed decision-making.

Still can’t trust AI with high-stakes decisions, limiting it to low-impact tasks?

Reap the full benefits of explainable AI with Elinext, a Clutch-recognized software development leader with a portfolio of 150+ AI projects that knows how to develop and use this technology safely and responsibly.

How Elinext Supports Explainable AI Adoption

Unlock the transformative potential of explainable AI with Elinext, an experienced tech partner backed by almost three decades of IT expertise. Our AI offerings span Business & Technology Consulting, Audit of AI/ML Algorithms, Machine Learning Development, Neural Network Development, AI Software Integration, and more.

AI Development Consulting

As a top-rated AI development solutions provider, Elinext brings a wide range of talent to the table, including AI consultants, PMs, AI developers, and ML engineers, to support matters like initial project estimates, tech stack selection, team composition, development roadmap planning, security and compliance, and much more.

Audit of AI/ML Algorithms

At Elinext, we can assess the entire lifecycle of your AI algorithms, from data preparation to model development, deployment, and change management, along with their operational efficacy, compliance, and fairness, to ensure your AI systems are lawful, ethical, and robust.

Machine Learning Development

Entrust the development and implementation of machine learning models to a tech partner with 75+ successfully delivered ML projects. Our engineers have built custom ML solutions with technologies such as Django, OpenAI, TensorFlow, Torch, Keras, and YOLO.

Neural Network Development

Operating on a multinational scale, Elinext helps businesses from 16+ industries leverage the benefits of explainable AI through holistic neural network development services. We build neural networks that detect financial fraud, evaluate medical conditions, predict equipment failures, and more.

MLOps Transformation

Looking for an IT service provider who can implement MLOps best practices to ensure an efficient ML lifecycle? Turn to Elinext. Our disciplined approach ensures your models are robustly integrated with production data and business applications, continuously monitored, and scaled effectively.

Generative AI Solutions

Tap into the benefits of explainable AI with ethical generative AI tools developed by Elinext's pre-vetted computer scientists and engineers. Generate new content, including images, text, code, audio, and video, in seconds while noticeably reducing costs.

Integration & API Services

Embed XAI capabilities into your systems cost-efficiently by partnering with an IT service provider that has a deep understanding of AI and API technologies. We will evaluate your enterprise's data sources (Internet of Things solutions, scraped web data, etc.) and business apps and build a scalable API infrastructure to support your AI initiative.

Industry‑Specific AI Solutions

No matter what your industry is, the Elinext team is ready to help you harness explainable AI benefits with custom domain-specific intelligent software engineered with ethics, resilience, security, privacy, and legal requirements in mind.

"While AI technology is becoming increasingly pervasive, most companies worldwide still can't trust AI with critical decisions, missing its full potential. It doesn't have to be that way.

At Elinext, we rely on the collective experience of machine learning engineers, data scientists, risk specialists, and lawyers, as well as the insights from our 150+ AI implementations, to create transparent and trustworthy intelligent systems that give users real confidence about decision-making.”

Maxim Dadychyn, Head of Generative AI

Explainable AI Solutions: Outlook for 2026

The trajectory for 2026 points to explainable AI shifting from an optional technical consideration to a necessity for enterprises worldwide.

As regulatory frameworks mature and AI integration deepens within compliance-intensive sectors like finance or healthcare, the ability to audit and trust AI decision-making becomes paramount. Organizations will increasingly prioritize solutions that move beyond post-hoc rationalizations to offer genuine, real-time transparency.

Capture the transformational benefits of explainable AI with an IT services provider experienced in engineering conversational, generative, and agentic AI solutions.

Conclusion

In 2026, explainable AI will become a non-negotiable cornerstone for business success. As AI integration deepens, organizations face a critical trust deficit, with stakeholders demanding transparency and regulators tightening AI oversight. XAI directly addresses this by demystifying AI decision-making, mitigating operational and reputational risks, and fostering the user confidence required for widespread adoption.

For business leaders, the creation of reliable, explainable, and fully auditable AI systems requires adopting a holistic approach that embeds governance, ethics, and technical rigor into each phase of software development.

FAQ

What is explainable AI (XAI)?

Explainable AI is a set of tools and practices to create reliable, explainable, and auditable AI models that build trust and enable users to confidently understand and manage their AI-driven decisions.

Why is explainable AI important for businesses?

A glass-box AI model that is auditable and aligned with regulatory standards helps businesses confidently manage the risks of mission-critical decisions and protects them from commercial failure, regulatory breaches, and reputational damage.

How will explainable AI impact business operations in 2026?

In 2026 and beyond, explainable AI will be fundamental to elevating customer experience, building user trust, ensuring regulatory compliance, and scaling AI, moving it from an experimental tool to a core, trusted business asset.

How can businesses adopt explainable AI effectively?

Businesses should embed explainable AI by design, integrating continuous model monitoring and cross-functional governance to ensure real-time explainability, compliance, and effective risk management.

What are the benefits of explainable AI for decision-makers?

Explainable AI gives decision-makers the ability to determine how AI decisions were made, ensuring that complex, high-stakes problems are solved with necessary human oversight, critical thinking, and ethics.

Is explainable AI more expensive or slower than traditional AI?

While explainable AI may require greater initial investment in testing and governance, it mitigates the far costlier risks of compliance failures and reputational damage from opaque systems. Generating explanations can also add some overhead compared with traditional AI, but this is often a worthwhile strategic trade-off for greater value and risk mitigation.