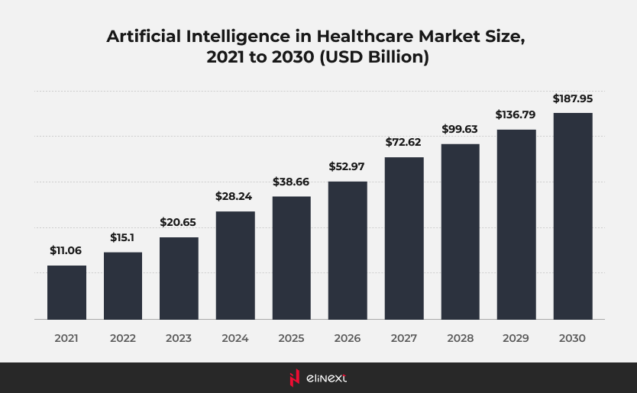

Artificial intelligence (AI) has moved from a futuristic concept to a major driver of innovation across industries, often through dedicated AI development solutions. In 2025, adoption continues to accelerate as companies use machine learning, natural language processing, and automation to improve efficiency, support decision-making, and enhance customer experiences. According to McKinsey, 72% of companies have adopted AI in at least one business function.

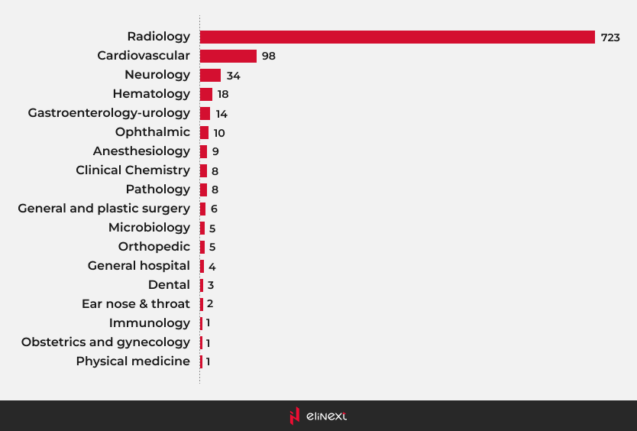

Most AI-enabled medical devices were in radiology

Radiology accounts for the largest share of AI-enabled medical devices. These devices use algorithms to analyze medical images such as X-rays, CT scans, and MRIs, helping clinicians detect conditions like tumors or fractures with greater accuracy and speed. Beyond improving diagnostic precision, this integration streamlines radiology workflows and can improve patient outcomes.

Use Cases of AI in Medical Devices

The market for AI medical devices is expanding rapidly, with applications in early disease detection, personalized treatment planning, and predictive analytics. These innovations improve patient care and operational efficiency across healthcare settings.

Enhanced Device Accuracy

AI medical devices can significantly improve accuracy in diagnostics and treatment. Machine learning algorithms can detect subtle patterns in clinical data that are easy to miss, which reduces errors and leads to better patient outcomes.
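Accuracy claims like the one above are usually quantified with diagnostic metrics such as sensitivity and specificity. The following is a minimal sketch, assuming binary labels where 1 means the condition is present; the function name and the sample labels are hypothetical and serve only to show how these metrics are derived from a device's predictions and confirmed diagnoses.

```python
from typing import Sequence


def diagnostic_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> dict:
    """Compute sensitivity, specificity, and accuracy for binary labels.

    Convention (assumed): 1 = condition present, 0 = condition absent.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly detected cases
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # correctly cleared cases
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed cases
    nan = float("nan")
    return {
        # Share of true cases the device detected
        "sensitivity": tp / (tp + fn) if tp + fn else nan,
        # Share of healthy cases the device correctly cleared
        "specificity": tn / (tn + fp) if tn + fp else nan,
        "accuracy": (tp + tn) / len(pairs),
    }


# Hypothetical example: confirmed diagnoses vs. device output
truth = [1, 1, 1, 0, 0, 0, 0, 1]
device = [1, 1, 0, 0, 0, 1, 0, 1]
print(diagnostic_metrics(truth, device))
# {'sensitivity': 0.75, 'specificity': 0.75, 'accuracy': 0.75}
```

Reporting sensitivity and specificity separately matters because overall accuracy can hide missed diagnoses when a condition is rare.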

More Efficient Production Processes

AI is transforming medical device production by automating workflows and streamlining operations. This boosts efficiency, reduces costs, and speeds the delivery of innovative healthcare solutions.

Improved Patient Outcomes

AI integration enhances patient management solutions, enabling closer monitoring and more personalized care. With timely, data-driven insights, healthcare providers can make informed decisions quickly, which improves patient outcomes.

Challenges and Mistakes in AI Adoption for Medical Devices

Adopting AI-based medical devices brings challenges ranging from regulatory compliance and data quality to transparency, privacy, and integration. Mistakes in implementation can lead to misdiagnosis, undermining patient safety and trust.

Challenge #1:

Lack of Regulatory Compliance

Regulatory compliance is a critical aspect of developing AI for medical devices. Healthcare is a highly regulated industry, and manufacturers must follow extensive guidelines. In the US, the Food and Drug Administration (FDA) regulates medical devices, including AI-enabled software. In the UK, the Medicines and Healthcare products Regulatory Agency (MHRA) regulates medical devices, while the Alan Turing Institute, the UK's national institute for data science and AI, promotes responsible AI use. France’s National Consultative Ethics Committee emphasizes transparency and informed consent, and Germany’s Bundesministerium für Gesundheit (Federal Ministry of Health) sets healthcare policy. Because regulations are updated frequently, medical device producers must stay informed. Non-compliance can lead to costly delays, rejections, or legal issues, which makes regulatory adherence a top challenge for manufacturers.

How to overcome:

To address regulatory compliance for AI-based medical devices, companies should appoint a dedicated person or team with expertise in the relevant regulations. This person or team tracks regulatory changes, interprets the requirements, and ensures the company’s practices align with applicable standards.

Challenge #2:

Insufficient Data Quality and Quantity

AI in medical devices relies on large volumes of high-quality data for training and validation. The data used to build device software must be detailed, accurate, and as free of bias as possible. Training on poor-quality data can introduce errors, propagate inaccuracies into electronic health records, and create security and privacy problems with long-term consequences. Medical data must also represent the target patient population so that the algorithm performs reliably across diverse demographics. Neglecting data quality and quantity can produce biased algorithms that compromise both patient safety and treatment effectiveness.
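One way to act on the requirement that data represent the target patient population is to compare each demographic group's share of the training data with its share of the intended population. The sketch below is illustrative only: the `age_band` field, the population shares, and the 0.8 tolerance are hypothetical assumptions, not values taken from any regulation or standard.

```python
from collections import Counter


def underrepresented_groups(records, key, target_shares, min_ratio=0.8):
    """Flag groups whose share of `records` is below min_ratio times their target share.

    Returns {group: observed_share / target_share} for each flagged group.
    `key`, `target_shares`, and `min_ratio` are illustrative assumptions.
    """
    counts = Counter(r.get(key, "unknown") for r in records)
    total = sum(counts.values())
    flagged = {}
    for group, target in target_shares.items():
        if target <= 0:
            continue  # a zero target share cannot be under-represented
        observed = counts.get(group, 0) / total if total else 0.0
        ratio = observed / target
        if ratio < min_ratio:
            flagged[group] = round(ratio, 3)
    return flagged


# Hypothetical training set skewed toward younger patients
training = (
    [{"age_band": "18-39"}] * 50
    + [{"age_band": "40-64"}] * 40
    + [{"age_band": "65+"}] * 10
)
# Hypothetical shares of each age band in the intended patient population
population = {"18-39": 0.35, "40-64": 0.35, "65+": 0.30}
print(underrepresented_groups(training, "age_band", population))
# {'65+': 0.333}
```

A flagged group signals that more data should be collected, or that performance should be validated separately for that group, before relying on the model across demographics.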

How to overcome:

Investing in data management technologies and preventive safeguards is crucial for AI in medical devices. Healthcare providers can use human biometrics solutions, such as biometric patient identification, to reduce duplicate or mismatched records. Consultants and technical specialists should monitor how data is collected, stored, and used to ensure its quality. System safeguards, such as validation rules and automatic flagging of duplicate records for verification, prevent errors and maintain data integrity. Regular staff training on data quality is also essential, because it helps staff understand how mistakes affect data reliability and, in turn, AI performance.
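As an illustration of safeguards that flag duplicates for verification, the sketch below checks records for missing required fields and groups records that appear to describe the same patient for human review rather than merging or deleting them. The schema (`patient_id`, `name`, `birth_date`) and the name-plus-birth-date matching rule are hypothetical simplifications; real patient matching is considerably more involved.

```python
from collections import defaultdict

# Hypothetical minimal schema; real EHR records carry many more identifiers.
REQUIRED_FIELDS = ("patient_id", "name", "birth_date")


def match_key(record):
    """Normalize name (case, extra spaces) and birth date into a matching key."""
    name = " ".join(record["name"].lower().split())
    return name, record["birth_date"].strip()


def check_records(records):
    """Return (records missing required fields, groups of likely duplicate IDs).

    Duplicates are only flagged for human verification, never merged or deleted.
    """
    invalid = []
    groups = defaultdict(list)
    for record in records:
        if any(not record.get(name) for name in REQUIRED_FIELDS):
            invalid.append(record)
            continue
        groups[match_key(record)].append(record["patient_id"])
    duplicates = [ids for ids in groups.values() if len(ids) > 1]
    return invalid, duplicates


records = [
    {"patient_id": "A1", "name": "Jane  Doe", "birth_date": "1980-04-02"},
    {"patient_id": "B7", "name": "jane doe", "birth_date": "1980-04-02"},
    {"patient_id": "C3", "name": "John Roe", "birth_date": "1975-11-30"},
    {"patient_id": "D9", "name": "", "birth_date": "1990-01-01"},
]
invalid, duplicates = check_records(records)
print([r["patient_id"] for r in invalid])  # ['D9']
print(duplicates)                          # [['A1', 'B7']]
```

Keeping a human in the loop for flagged duplicates avoids silently merging records of two different patients who happen to share a name and birth date.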

Challenge #3:

Lack of Transparency and Understanding

The “black box” nature of AI algorithms can hinder their acceptance in healthcare. Medical professionals and regulatory bodies need transparency and explainability before they can trust AI-driven medical devices. If these aspects are not prioritized, adoption of AI medical devices may stall and ethical concerns may grow. Making AI medical devices interpretable is therefore crucial for building trust among users and regulators, and addressing transparency will become even more important as the market grows.

How to overcome:

Software development companies should prioritize AI models for medical devices that can clearly explain their decisions. Techniques such as model interpretability methods, feature importance analysis, and decision boundary visualization improve the explainability of AI-based models. “Explainable AI” (XAI) has become a prominent field for good reason. Interpretability reveals how an AI model processes data to make predictions, helping manufacturers and medical professionals understand which factors drive its decisions. This understanding is crucial for smooth operations and for trust in AI medical devices.
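Feature importance analysis can be illustrated with permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is a minimal, dependency-free version; the toy model (which flags a case when a hypothetical "lesion size" feature exceeds 5.0) and the data are invented for illustration. For fitted scikit-learn estimators, `sklearn.inspection.permutation_importance` provides a full implementation.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled (higher = more important)."""
    rng = random.Random(seed)  # seeded for reproducible shuffles
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild rows with only feature j replaced by its shuffled values
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy model: flags a case when "lesion size" (feature 0) exceeds 5.0
# and ignores feature 1 entirely.
def toy_model(row):
    return int(row[0] > 5.0)


X = [[2.0, 1.0], [3.0, 0.0], [4.5, 1.0], [1.0, 0.0],
     [6.0, 1.0], [7.5, 0.0], [8.0, 0.0], [9.0, 1.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
print(permutation_importance(toy_model, X, y))
```

Because the toy model ignores the second feature, its importance comes out as exactly 0.0, while shuffling the first feature lowers accuracy and yields a positive score.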

Challenge #4:

Neglecting Data Privacy and Security

AI-powered medical devices handle sensitive patient data, which makes data privacy and security critical. Compliance with regulations such as the General Data Protection Regulation (GDPR) in the European Union and the Health Insurance Portability and Accountability Act (HIPAA) in the United States is essential. Custom healthcare software development companies must implement robust security measures that protect patient information from unauthorized access, ensuring both trust and compliance.

Neglecting data privacy and security can result in loss of patient trust, legal repercussions, and damage to the reputation of the medical device and the software development company.

How to overcome:

To mitigate these risks, software development companies must build data privacy and security into AI medical devices from the start of development. Key measures include encryption, access controls, regular security audits, and employee training on data protection. Incident response plans are essential for handling breaches effectively. Strict security measures and compliance with data protection regulations are vital for maintaining patient trust and privacy.
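Access controls and audits, two of the measures listed above, can be sketched as a deny-by-default, role-based permission check that records every access attempt. The roles, permission names, and in-memory log below are hypothetical; a production system would persist the audit trail in tamper-resistant storage, and encryption should be handled by a vetted cryptography library (not shown here).

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission policy; a real device would load this from managed config.
ROLE_PERMISSIONS = {
    "clinician": {"read_phi", "write_notes"},
    "technician": {"read_device_logs"},
    "auditor": {"read_audit_log"},
}


@dataclass
class AccessController:
    # In-memory audit trail for illustration only.
    audit_log: list = field(default_factory=list)

    def authorize(self, user: str, role: str, action: str) -> bool:
        """Deny by default: allow only actions the role explicitly grants, and log every attempt."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed


ac = AccessController()
print(ac.authorize("dr_lee", "clinician", "read_phi"))    # True
print(ac.authorize("tech_01", "technician", "read_phi"))  # False
print(ac.authorize("guest", "visitor", "read_phi"))       # False (unknown role)
print(len(ac.audit_log))                                  # 3
```

Logging denied attempts as well as granted ones gives security audits the evidence they need to spot misuse.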

Challenge #5:

Limited Interoperability and Integration

Neglecting interoperability in AI medical devices can lead to siloed data, inefficient workflows, and slower adoption. Medical devices must integrate seamlessly with existing healthcare systems such as electronic health records (EHRs). When devices can’t share data, valuable patient information stays isolated, which hampers comprehensive care: healthcare providers may lack a complete view of a patient’s history. Manual data transfer between systems is also time-consuming and error-prone, which affects patient care and increases administrative burden.

How to overcome:

Standardization, APIs, data harmonization, and ongoing monitoring are vital for interoperability in AI medical devices. Industry-wide standards for data formats and communication protocols are the foundation: HL7 International develops healthcare data exchange standards such as HL7 v2 and FHIR, and DICOM is the standard for medical imaging data. AI-powered medical devices should adhere to these standards for compatibility. Well-defined APIs enable data exchange between systems, while harmonizing data elements (such as patient demographics and clinical measurements) ensures consistent integration. As technology evolves, continuous monitoring and improvement are needed to address interoperability challenges effectively.
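To illustrate data harmonization followed by standards-based exchange, the sketch below maps two hypothetical vendor formats for heart rate onto one common schema in beats per minute, then wraps the result in a minimal HL7 FHIR R4 `Observation` resource coded with LOINC 8867-4 (heart rate). The vendor field names are invented, and the output omits elements (such as `category` and `effectiveDateTime`) that FHIR vital-signs profiles expect, so treat it as a sketch rather than a conformant resource.

```python
import json

LOINC_HEART_RATE = "8867-4"  # LOINC code for heart rate


def harmonize(record: dict) -> dict:
    """Map hypothetical vendor formats onto one common schema in beats per minute."""
    if "hr_bpm" in record:  # hypothetical vendor A: flat fields, already in bpm
        return {"patient_id": record["pid"], "heart_rate_bpm": float(record["hr_bpm"])}
    if "heartRate" in record:  # hypothetical vendor B: nested value with a unit
        hr = record["heartRate"]
        if hr["unit"] == "Hz":
            bpm = hr["value"] * 60.0  # 1 Hz = 60 beats per minute
        elif hr["unit"] == "bpm":
            bpm = float(hr["value"])
        else:
            raise ValueError(f"unsupported unit: {hr['unit']}")
        return {"patient_id": record["patientId"], "heart_rate_bpm": bpm}
    raise ValueError("unrecognized record format")


def to_fhir_observation(common: dict) -> dict:
    """Wrap a harmonized reading in a minimal (not profile-conformant) FHIR R4 Observation."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {"coding": [{"system": "http://loinc.org",
                             "code": LOINC_HEART_RATE,
                             "display": "Heart rate"}]},
        "subject": {"reference": f"Patient/{common['patient_id']}"},
        "valueQuantity": {"value": common["heart_rate_bpm"],
                          "unit": "beats/minute",
                          "system": "http://unitsofmeasure.org",
                          "code": "/min"},  # UCUM code for "per minute"
    }


vendor_a = {"pid": "123", "hr_bpm": 75}
vendor_b = {"patientId": "123", "heartRate": {"value": 1.25, "unit": "Hz"}}
print(harmonize(vendor_a) == harmonize(vendor_b))  # True
print(json.dumps(to_fhir_observation(harmonize(vendor_b)), indent=2))
```

Harmonizing before serializing keeps unit conversions in one place, so every downstream system receives the same measurement in the same units regardless of which device produced it.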

The Future of AI in Medical Devices

The future of AI in medical devices is set to transform healthcare, driven by advanced ML development solutions. These innovations will enable more accurate diagnostics, personalized treatment plans, and real-time patient monitoring. As ML algorithms improve, they will analyze vast datasets, uncovering patterns that enhance decision-making and outcomes.

Furthermore, integration with electronic health records will streamline workflows, fostering collaboration among healthcare providers. However, addressing challenges like data security, interoperability, and regulatory compliance will be crucial to ensure that AI-powered devices can be trusted, effective, and widely adopted in the healthcare landscape.

Conclusion

To fully realize the benefits of AI-based medical devices, it is essential to overcome common challenges in their adoption. Addressing data privacy concerns, ensuring interoperability, and adhering to regulatory standards will foster trust among healthcare providers and patients. Continuous training for medical professionals on these technologies will enhance usability and acceptance.

Collaborative efforts among developers, healthcare organizations, and regulators can create an environment that supports innovation while prioritizing patient safety. By tackling these challenges, we can unlock the transformative potential of AI-based medical devices in improving patient care and outcomes.

FAQ

Why is regulatory approval such a big challenge in AI for medical devices?

Regulatory approval is challenging for AI in medical devices because manufacturers must demonstrate safety, efficacy, and compliance with evolving standards. AI models can change as they learn from new data, which complicates traditional evaluation processes designed for static devices and requires robust validation.

How can AI systems in medical devices maintain data privacy and security?

AI systems in medical devices can maintain data privacy and security by implementing strong encryption, access controls, regular audits, and compliance with regulations like GDPR and HIPAA to protect patient information.

How can healthcare providers trust AI-driven medical devices?

Healthcare providers can trust AI-driven medical devices by ensuring transparency in algorithms, validating performance through rigorous testing, adhering to regulatory standards, and providing ongoing training.

How can medical device manufacturers prepare for AI adoption?

Medical device manufacturers can prepare for AI adoption by investing in R&D, understanding the AI medical devices market, ensuring regulatory compliance, and fostering partnerships for technology integration.