Project Background

A large call center asked Elinext to build emotion detection software that could recognize emotions in speech. The client wanted an application that would:

- Allow call-center operators to automatically detect callers' emotions and act accordingly, for example by routing calls from angry clients to more experienced colleagues.

- Detect customers' happiness, sadness, and satisfaction.

- Let management know when it is time to replace a tired operator by detecting and evaluating the operator's emotions.

Challenge

The Elinext team faced a challenging task: to develop a voice-analysis application that would help the call center organize its work as efficiently as possible by understanding what its customers and employees really feel and think.

Project Description

The development process was organized around the creation and training of neural networks. The main steps our team took to build the solution were:

- Preparing data

- Extraction of sound features

- Creation of neural networks

Development Process

Each of the above-mentioned steps is described further below.

- Preparing data

To prepare data for model training and testing, our developers used the Crowd-Sourced Emotional Multimodal Actors Dataset (CREMA-D) for training and the call center's own recordings for testing.

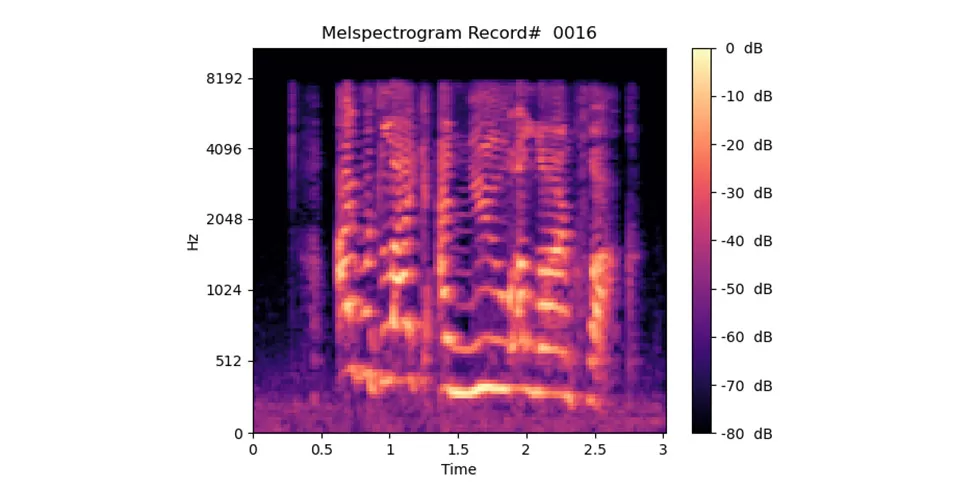
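As an illustration of the data preparation step, the sketch below builds a list of (file, label) pairs from CREMA-D file names. It relies on the public CREMA-D naming scheme, in which the third underscore-separated field is an emotion code; the function names and the example path are hypothetical, and the source does not describe how the team actually indexed its data.

```python
from pathlib import Path

# CREMA-D encodes the label in the file name: ActorID_Sentence_Emotion_Level.wav
EMOTION_CODES = {
    "ANG": "anger",
    "DIS": "disgust",
    "FEA": "fear",
    "HAP": "happiness",
    "NEU": "neutral",
    "SAD": "sadness",
}


def label_from_filename(path):
    """Return the emotion label encoded in a CREMA-D style file name."""
    parts = Path(path).stem.split("_")
    if len(parts) != 4:
        raise ValueError(f"unexpected file name: {path}")
    return EMOTION_CODES[parts[2]]


def build_index(paths):
    """Pair every audio path with its emotion label (hypothetical helper)."""
    return [(str(p), label_from_filename(p)) for p in paths]
```

For example, `label_from_filename("AudioWAV/1001_DFA_ANG_XX.wav")` returns `"anger"`. Call center recordings used for testing would need their own labeling scheme, which is not covered here.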

- Extraction of sound features

Using the LibROSA package, our team extracted a 192-dimensional feature vector for every audio file.

- Creation of neural networks

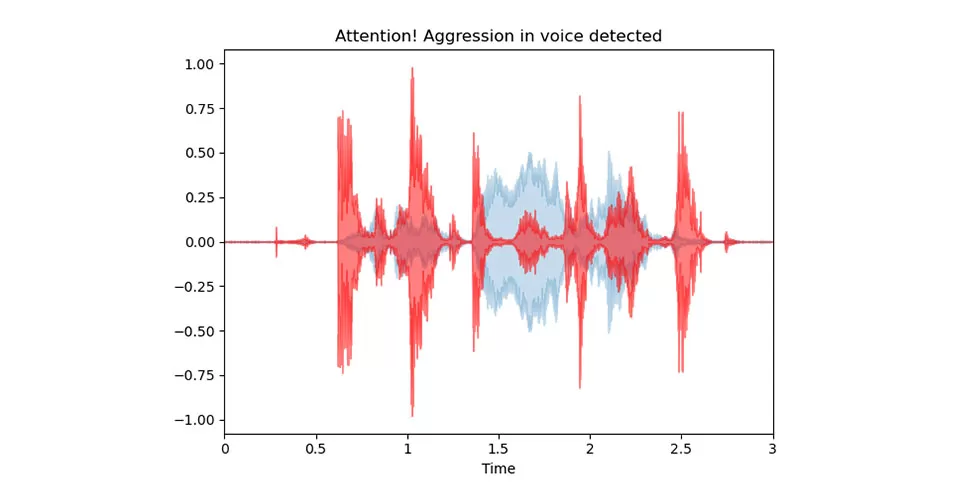

Using Keras and TensorFlow, our team built neural network models from scratch, adding and widening layers during development. We tested different model types, including a Multilayer Perceptron (MLP) and a Convolutional Neural Network (CNN).

Our developers also tested different model architectures, sometimes starting from those used in research papers on similar projects. In every case, the choice of model was based on classification accuracy on the validation set.
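Selecting a model by validation accuracy can be sketched as a small helper. It assumes each candidate was trained with Keras and that the per-epoch validation accuracies (the `"val_accuracy"` list of a `History` object) were collected into a dictionary; the helper name and the dictionary layout are hypothetical.

```python
def best_by_validation(histories):
    """Return (name, accuracy) of the candidate with the best validation accuracy.

    `histories` maps a model name to its list of per-epoch validation
    accuracies, e.g. history.history["val_accuracy"] from Keras.
    """
    best = {name: max(scores) for name, scores in histories.items()}
    name = max(best, key=best.get)
    return name, best[name]
```

Comparing on a held-out validation set rather than on training accuracy keeps the choice from favoring a model that merely memorizes the training recordings.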

As a result, our developers decided to use a CNN architecture.
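The final architecture is not published, so the block below is only a sketch of what a small Keras CNN over a 192-dimensional feature vector might look like. The layer sizes, the 1D convolutions, the dropout rate, and the six output classes (matching the CREMA-D labels) are all assumptions.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_cnn(n_features=192, n_classes=6):
    """Build a small 1D CNN classifier (illustrative layer sizes only)."""
    model = tf.keras.Sequential([
        # Each feature vector is treated as a 1D sequence with one channel.
        tf.keras.Input(shape=(n_features, 1)),
        layers.Conv1D(32, kernel_size=5, padding="same", activation="relu"),
        layers.MaxPooling1D(pool_size=2),
        layers.Conv1D(64, kernel_size=5, padding="same", activation="relu"),
        layers.GlobalAveragePooling1D(),
        layers.Dropout(0.3),
        layers.Dense(n_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # expects integer class labels
        metrics=["accuracy"],
    )
    return model
```

A feature matrix of shape `(n_samples, 192)` would need a trailing channel axis, for example `X[..., None]`, before being passed to `model.fit` together with validation data.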

Results

The Elinext team successfully created an Android application that recognizes emotions in speech and gives call center workers and their managers insight into what people really feel.

The same system could be used by companies that provide security, support, and rescue services.