Challenge

The company had recently launched an online store. The website team spent a lot of time answering shoppers’ questions and moderating reviews and comments. Unsurprisingly, some people ignored the website’s rules and basic etiquette, posting derogatory language and insults.

The best way to solve a problem like that? Automation. The company wanted to use artificial intelligence (AI) to build a hate-speech detector and an FAQ bot. So it looked for a developer who could do that and came across Elinext. We were selected for the job because we had the required skills and resources at hand.

Solution

Elinext built a small Agile team and dove right in. First of all, we looked at the most advanced natural language processing (NLP) technology available. Our findings suggested we should treat the design and development of the chatbot and the hate-speech detector as two parallel machine learning (ML) projects.

Hate-Speech Detector

We needed to build an ML model that would identify inappropriate user-generated text. To be trained, the model required a labeled dataset: examples of hateful, offensive, and normal posts. As a new company, our client didn’t have one.

The best solution was to find a publicly available hate-speech dataset. After comparing several candidates, we chose HateXplain.
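HateXplain labels each post several times, once per annotator, with one of `hatespeech`, `offensive`, or `normal`, so the labels have to be merged into one training label per post. A common approach is a majority vote that drops posts where the annotators all disagree. The sketch below assumes a record shape modeled on the public `dataset.json` release (`post_tokens` plus an `annotators` list with a `label` per annotator); verify the field names against the copy you download. The helper names are hypothetical, not code from this project.

```python
from collections import Counter
from typing import Optional

# Map HateXplain's label strings to the three classes shown to the administrator.
LABEL_NAMES = {"normal": "normal", "offensive": "offensive", "hatespeech": "hateful"}


def majority_label(annotator_labels: list[str]) -> Optional[str]:
    """Return the label chosen by more than half of the annotators, or None."""
    label, votes = Counter(annotator_labels).most_common(1)[0]
    # With three annotators, a majority means at least two matching votes.
    if votes * 2 <= len(annotator_labels):
        return None
    return label


def to_training_example(entry: dict) -> Optional[tuple[str, str]]:
    """Turn one dataset entry into (text, class), or None if there is no majority.

    Assumes 'post_tokens' (list of words) and 'annotators' (list of dicts
    with a 'label' key), as in the public dataset.json layout.
    """
    label = majority_label([a["label"] for a in entry["annotators"]])
    if label is None:
        return None
    return " ".join(entry["post_tokens"]), LABEL_NAMES[label]


if __name__ == "__main__":
    sample = {
        "post_tokens": ["have", "a", "nice", "day"],
        "annotators": [{"label": "normal"}, {"label": "normal"}, {"label": "offensive"}],
    }
    print(to_training_example(sample))  # ('have a nice day', 'normal')
```

Posts without a clear majority are skipped rather than guessed, so ambiguous examples do not add label noise to training.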

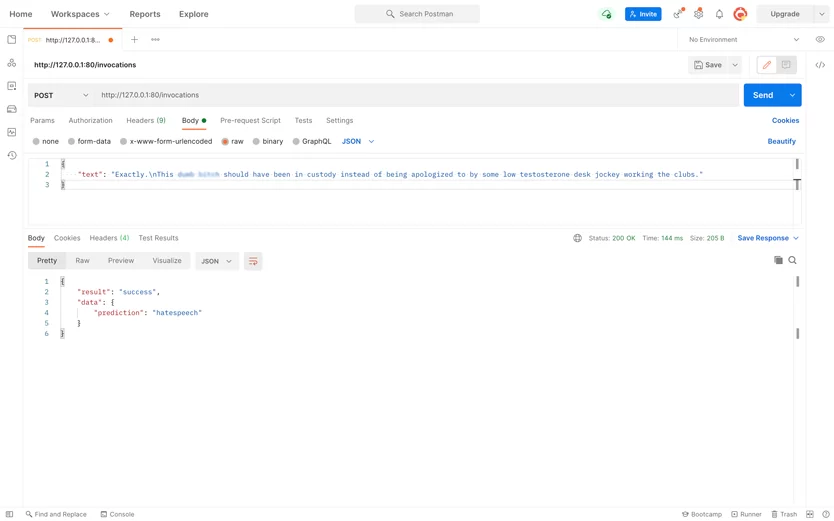

We created a GitHub repository and placed the dataset there alongside an open-source language model, Generative Pre-trained Transformer 2 (GPT-2). Then, we automated training the model on the dataset using Amazon Elastic Compute Cloud (Amazon EC2) instances with Nvidia GPUs.

The code for the web service itself was the final element of the repository. Once it was uploaded, the service was automatically deployed as a Docker container together with the model’s binary files. Shipping the binaries alongside the service will allow the client to analyze and debug the application in the future.

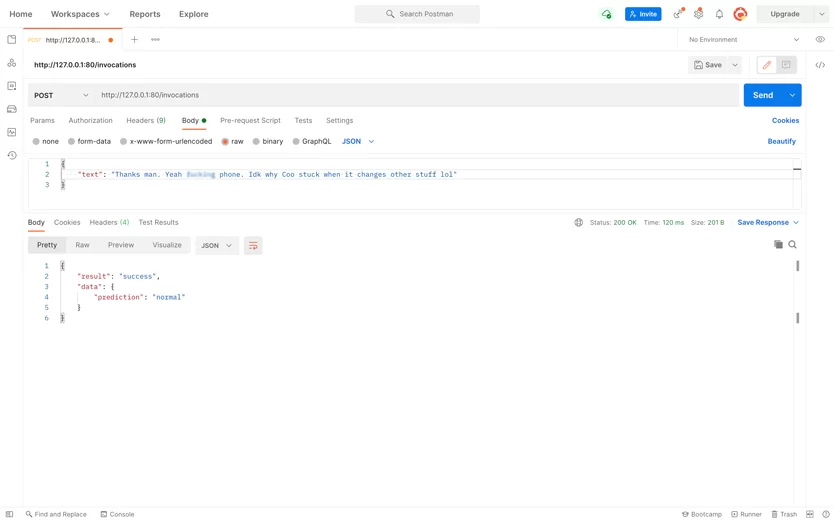

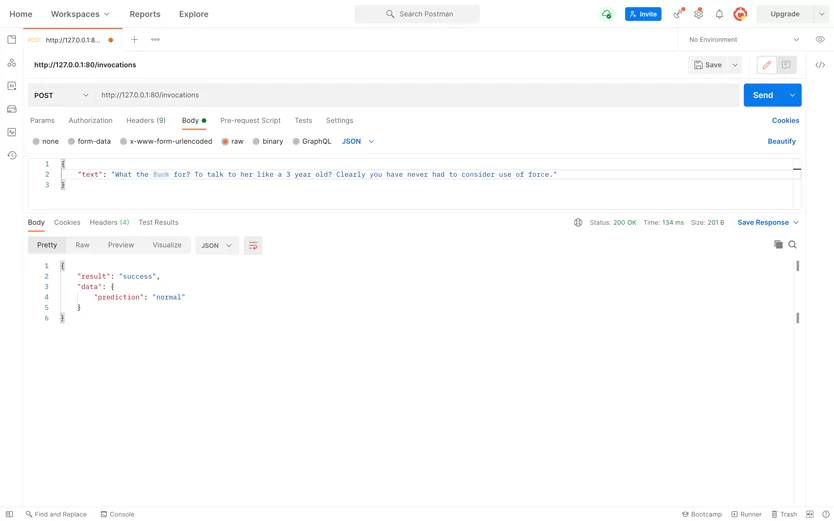

The process starts with the HTTP server that hosts the GPT-2 model and its tokenizer. When the server receives a piece of text submitted by a user, the tokenizer splits it into tokens (subword units) that GPT-2 can process.
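A minimal sketch of such a service, using only Python’s standard library. The `/classify` path, the JSON field names, and the `classify_text` stub are assumptions for illustration; in a real deployment the stub would call the GPT-2 tokenizer and model, loaded once at startup rather than on every request.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def classify_text(text: str) -> str:
    """Hypothetical stand-in for tokenizer + GPT-2 inference.

    A real implementation would tokenize `text`, run the fine-tuned model,
    and return 'normal', 'offensive', or 'hateful'. This stub always says 'normal'.
    """
    return "normal"


def handle_classify(body: bytes) -> tuple[int, dict]:
    """Validate a JSON request body and return (HTTP status, response payload)."""
    try:
        text = json.loads(body)["text"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'text' field"}
    if not isinstance(text, str):
        return 400, {"error": "'text' must be a string"}
    return 200, {"text": text, "label": classify_text(text)}


class ClassifyHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/classify":  # assumed endpoint path
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_classify(self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


if __name__ == "__main__":
    # The tokenizer and model would be loaded here, before the server starts.
    HTTPServer(("0.0.0.0", 8000), ClassifyHandler).serve_forever()
```

Keeping request validation in `handle_classify`, separate from the HTTP plumbing, makes the logic easy to test without starting a server.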

GPT-2 then turns the tokens into a score for each class, and the softmax function converts those scores into probabilities. The class with the highest probability determines whether the system marks the input text as normal, hateful, or offensive for the website administrator.
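The softmax step can be illustrated in a few lines. In a typical classification setup, the model outputs one raw score (logit) per class; softmax turns those scores into probabilities that sum to 1, and the most probable class becomes the label. The class order and the example scores below are made up for illustration.

```python
import math

# Assumed class order; it must match the order used when the model was trained.
CLASSES = ("normal", "hateful", "offensive")


def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the maximum prevents overflow in exp() and leaves the result unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_from_scores(scores: list[float]) -> tuple[str, float]:
    """Return the most probable class and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs[best]


if __name__ == "__main__":
    label, p = label_from_scores([0.3, 2.1, 1.0])  # made-up logits
    print(label, round(p, 2))  # hateful 0.67
```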

We automated the process from end to end with GitHub Actions running on virtual servers, sparing both our hardware and the client’s.

Notably, the model can distinguish hateful messages from ones that merely use strong language, and aggressive rhetoric from slang. With an overall accuracy of around 68%, it works best as a first-pass filter: by flagging likely hateful or offensive posts for the administrators, it can slash moderation time.

FAQ Helper Bot

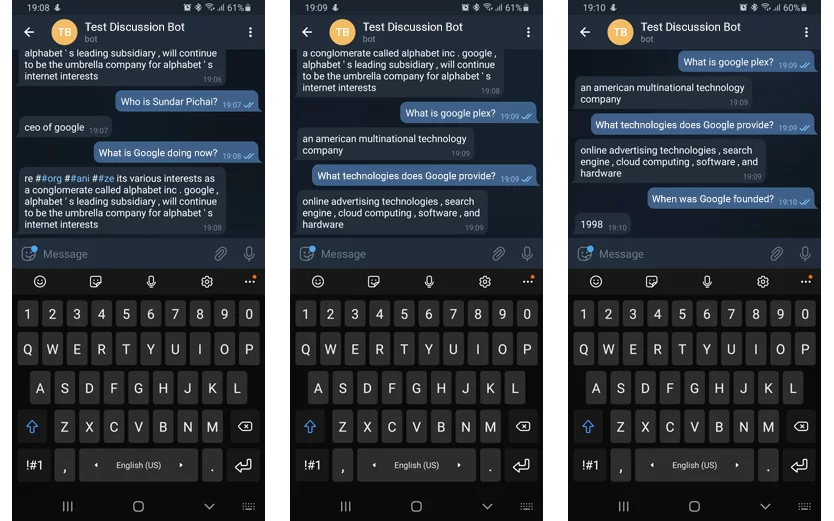

The FAQ bot had to answer eCommerce-related questions from users. That meant putting together a knowledge base and enabling the bot to understand user queries and find the relevant information in it.

To make the bot understand queries, we used a pre-trained English language model: Bidirectional Encoder Representations from Transformers (BERT).

But before being recognized, the text needs to get into the system. How does this happen?

We configured an HTTP API and the Telegram API, giving visitors two ways to reach the bot. They can open a chat window on the website and talk to the bot there, and the bot will also suggest switching to Telegram and send them a link to the chat.
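One way to keep the two channels consistent is to route both into a single answering function. The sketch below assumes the website chat posts JSON shaped like `{"question": ..., "session_id": ...}` and that the Telegram side receives updates in the Bot API’s `Update` format (`message.chat.id`, `message.text`). It builds the parameters for the Bot API’s `sendMessage` method but does not send anything. The `answer` stub, the bot username, and the use of a session ID as the deep-link parameter are all hypothetical.

```python
import re
from typing import Optional

BOT_USERNAME = "ExampleShopFaqBot"  # hypothetical bot username


def answer(question: str) -> str:
    """Hypothetical stand-in for the BERT-based answering step."""
    return "(answer from the knowledge base)"


def telegram_link(session_id: str) -> str:
    """Build a t.me deep link that opens the bot, passing the session as a start parameter.

    Telegram accepts up to 64 characters from A-Z, a-z, 0-9, '_' and '-',
    so anything else is stripped.
    """
    param = re.sub(r"[^A-Za-z0-9_-]", "", session_id)[:64]
    link = f"https://t.me/{BOT_USERNAME}"
    return f"{link}?start={param}" if param else link


def handle_web_message(body: dict) -> dict:
    """Reply to the website chat widget and offer a link to continue in Telegram."""
    return {
        "answer": answer(body["question"]),
        "telegram_link": telegram_link(body.get("session_id", "")),
    }


def handle_telegram_update(update: dict) -> Optional[dict]:
    """Map a Telegram update to sendMessage parameters, or None if there is nothing to answer."""
    message = update.get("message")
    if not message or "text" not in message:
        return None  # edited messages, stickers, photos, etc. are ignored
    text = message["text"]
    # Opening the deep link sends "/start <parameter>" to the bot.
    reply = "Hi! What would you like to know?" if text.startswith("/start") else answer(text)
    return {"chat_id": message["chat"]["id"], "text": reply}
```

Because both handlers call the same `answer` function, a visitor gets the same reply whichever channel they use. The dict returned by `handle_telegram_update` would be sent as the body of a request to the Bot API’s `sendMessage` method.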

The web server processes the query and searches for the answer in the context, a body of reference text prepared by a website administrator. Administrators can modify, expand, or replace the context.
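BERT-based question answering is commonly extractive: the model reads the question together with the context and scores every context token as a possible start and as a possible end of the answer, and the answer is the span with the highest combined score. Assuming the bot works this way, the final span-selection step looks roughly like the sketch below. The tokens and scores are made up to stand in for real model output, and `best_span` and `max_len` are illustrative names, not part of any library.

```python
def best_span(start_scores: list[float], end_scores: list[float], max_len: int = 30) -> tuple[int, int]:
    """Return (start, end) token indices maximizing start_scores[start] + end_scores[end].

    Only spans with start <= end and at most max_len tokens are considered.
    """
    best, best_score = (0, 0), float("-inf")
    for i, s in enumerate(start_scores):
        for j in range(i, min(i + max_len, len(end_scores))):
            score = s + end_scores[j]
            if score > best_score:
                best, best_score = (i, j), score
    return best


if __name__ == "__main__":
    # Made-up context tokens and scores for "How long do I have to return an item?"
    tokens = ["Returns", "are", "accepted", "within", "30", "days", "of", "delivery"]
    start_scores = [0.1, 0.0, 0.2, 1.5, 2.0, 0.3, 0.0, 0.1]
    end_scores = [0.0, 0.0, 0.1, 0.2, 0.4, 2.5, 0.1, 1.8]
    i, j = best_span(start_scores, end_scores)
    print(" ".join(tokens[i : j + 1]))  # 30 days
```

The `start <= end` constraint matters: the highest start and end scores can fall in the wrong order, and the search must not return an inverted span.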

Of course, people sometimes make typos or spelling errors. Unless a query is an indigestible mess of letters, the bot still interprets it correctly and provides an answer, so the conversation feels similar to chatting with a trained operator.
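One simple way to add this kind of tolerance, shown as an illustration rather than as the bot’s actual mechanism, is to snap each unknown query word to the closest word in the context vocabulary before the query reaches the model. The sketch uses `difflib.get_close_matches` from Python’s standard library; the 0.8 similarity cutoff and the function names are assumptions. A word with no sufficiently close match is left as-is, which corresponds to the “indigestible mess of letters” case.

```python
import difflib
import re


def build_vocabulary(context: str) -> set[str]:
    """Collect the lowercase words that appear in the administrator's context."""
    return set(re.findall(r"[a-z0-9']+", context.lower()))


def correct_query(query: str, vocabulary: set[str], cutoff: float = 0.8) -> str:
    """Replace misspelled words with their closest vocabulary match, if one is close enough.

    The 0.8 cutoff is an assumed value; lower it to correct more aggressively.
    """
    corrected = []
    for word in re.findall(r"[a-z0-9']+", query.lower()):
        if word in vocabulary:
            corrected.append(word)
            continue
        matches = difflib.get_close_matches(word, vocabulary, n=1, cutoff=cutoff)
        corrected.append(matches[0] if matches else word)
    return " ".join(corrected)


if __name__ == "__main__":
    vocab = build_vocabulary("Returns are accepted within 30 days of delivery.")
    print(correct_query("Retrns after deliverry?", vocab))  # returns after delivery
```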

Result

In two weeks, we built two applications that saved the client’s website administrators hundreds of working hours.

Moreover, shoppers no longer have to plod through a long FAQ split into multiple sections. Instead, they can ask the chatbot whatever interests them and get an answer immediately.

The two applications we’ve built have saved the client time and money and streamlined the path to purchase.