Client

A Danish-American telecommunication software provider commissioned Elinext to develop a web application for managing networks and routers remotely.

Challenge

Internet service providers need a large customer support workforce to help subscribers resolve connection issues. Each issue takes considerable time to handle, because operators have to walk customers through what needs to be done with their router.

One Danish-American company set out to change that. It came up with the idea of web software that would give operators access to customers’ routers and let them act on those routers directly, in real time. Nothing like it had been done before.

While looking for a development partner, the company came across Elinext. With our extensive experience in real-time processing and Big Data, our team was a good fit for the project.

Process

Since the project started, we have followed the Agile methodology and communicated directly with the customer’s team several times a day. Some meetings and debugging sessions run long (up to 4 hours), but they are consistently productive.

We started by implementing cloud adapters for the routers (CPE) and the firmware that our client’s C++ developers had already been working on. In parallel, we created adapters for third-party routers and built common APIs for all of them. We also set up a Kubernetes cluster that can be deployed on any platform.
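The idea of per-vendor adapters behind a common API can be illustrated with a minimal Python sketch of the adapter pattern: each router family gets its own adapter class, and the rest of the platform talks only to the shared interface. The class and method names (`RouterAdapter`, `get_parameters`, `reboot`, `describe`) and the vendor field names are hypothetical; this is not the project’s actual code or API.

```python
from abc import ABC, abstractmethod


class RouterAdapter(ABC):
    """Hypothetical common interface that every router adapter implements."""

    @abstractmethod
    def get_parameters(self) -> dict:
        """Return the device's parameters in a vendor-neutral shape."""

    @abstractmethod
    def reboot(self) -> str:
        """Ask the device to reboot and return a status message."""


class InHouseRouterAdapter(RouterAdapter):
    """Stand-in for a router running the client's own firmware."""

    def __init__(self, serial: str) -> None:
        self.serial = serial

    def get_parameters(self) -> dict:
        # Hypothetical values; a real adapter would query the device over the network.
        return {"serial": self.serial, "vendor": "in-house", "uptime_s": 3600}

    def reboot(self) -> str:
        return f"{self.serial}: reboot queued"


class ThirdPartyRouterAdapter(RouterAdapter):
    """Stand-in for a third-party router that uses its own field names."""

    def __init__(self, raw: dict) -> None:
        self.raw = raw

    def get_parameters(self) -> dict:
        # Translate the vendor's (hypothetical) keys into the common shape.
        return {"serial": self.raw["SN"], "vendor": "third-party", "uptime_s": self.raw["UpTimeSec"]}

    def reboot(self) -> str:
        return f"{self.raw['SN']}: reboot sent via vendor protocol"


def describe(adapter: RouterAdapter) -> str:
    """Platform code depends only on the common interface, never on the vendor."""
    params = adapter.get_parameters()
    return f"{params['serial']} ({params['vendor']}) up {params['uptime_s']} s"


routers = [InHouseRouterAdapter("A1"), ThirdPartyRouterAdapter({"SN": "B2", "UpTimeSec": 120})]
for router in routers:
    print(describe(router))
```

With this structure, supporting another router model means writing one more adapter class; callers such as `describe` stay unchanged.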

During the latest stage, we enabled the software to collect and analyze 1,000,000 data points per second in real time.

We built the MVP in just two months and completed the fully functional product four months later. Implementing AI and ML took 1.5 years from the start of the project.

Tuning Up the Message Broker

The client had been using the Apache Pulsar message broker, but their developers had been scaling it incorrectly, and we had to reverse that approach. We eventually tuned the broker, although this also required the client to make some changes to their firmware.

Data Simulation

It is difficult to predict how each device will behave in its environment: even the material of a particular wall can affect signal quality. On top of that, we needed to generate 1,000,000 payloads per second. Together, these factors made simulating the system’s behavior a challenge.

To handle this, we used a separate Kotlin-based cluster dedicated to simulation.
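A simulator of this kind can be sketched as follows. This example is in Python rather than Kotlin: it gives each simulated router a baseline signal strength and a random set of walls, subtracts a per-material loss, adds Gaussian noise, and emits one payload per router per simulated second. The attenuation values, payload fields and function names are assumptions made for illustration. Reaching 1,000,000 payloads per second would require spreading generation across a cluster, as the team did, rather than running a single Python process.

```python
import random
from dataclasses import dataclass

# Hypothetical per-wall attenuation in dB; real values depend on material and frequency.
WALL_LOSS_DB = {"drywall": 3.0, "brick": 8.0, "concrete": 15.0}


@dataclass
class SimulatedRouter:
    router_id: int
    base_rssi_dbm: float  # assumed signal strength with no obstacles
    walls: list           # wall materials between router and client device

    def payload(self, rng: random.Random, timestamp: float) -> dict:
        """Produce one synthetic telemetry payload for this router."""
        loss = sum(WALL_LOSS_DB[w] for w in self.walls)
        noise = rng.gauss(0.0, 2.0)  # random fluctuation, in dB
        return {
            "router_id": self.router_id,
            "ts": timestamp,
            "rssi_dbm": round(self.base_rssi_dbm - loss + noise, 1),
        }


def make_fleet(n: int, seed: int = 42) -> list:
    """Create n routers, each with a random baseline and zero to three walls."""
    rng = random.Random(seed)
    materials = list(WALL_LOSS_DB)
    return [
        SimulatedRouter(i, rng.uniform(-45.0, -30.0), rng.choices(materials, k=rng.randint(0, 3)))
        for i in range(n)
    ]


def generate_tick(fleet: list, timestamp: float, seed: int = 0) -> list:
    """One simulated second: every router emits exactly one payload."""
    rng = random.Random(seed)
    return [router.payload(rng, timestamp) for router in fleet]


fleet = make_fleet(1_000)
batch = generate_tick(fleet, timestamp=0.0)
print(len(batch), batch[0])
```

Seeding the random generators keeps runs reproducible, which makes it easier to compare system behavior before and after a change.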

Testing

Our team handled automated quality assurance only; manual testing was the client’s responsibility.

Product

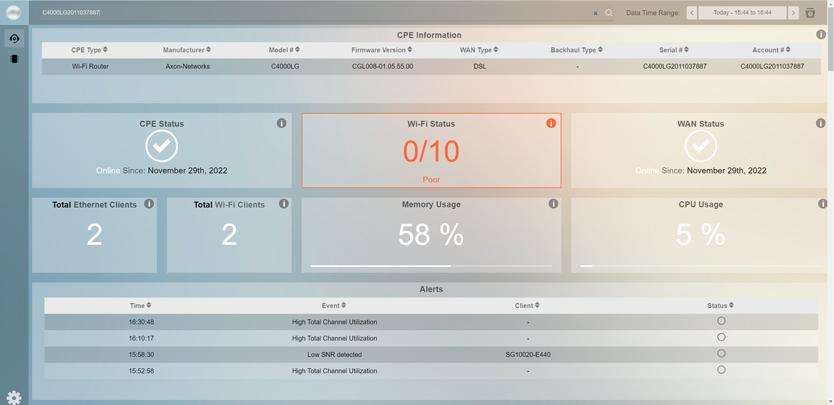

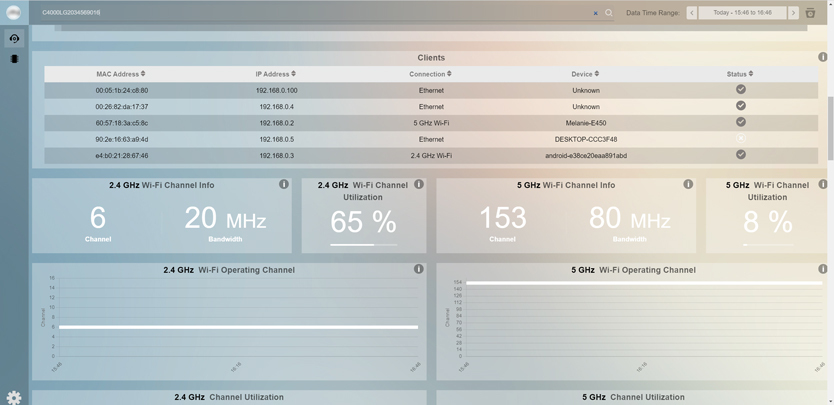

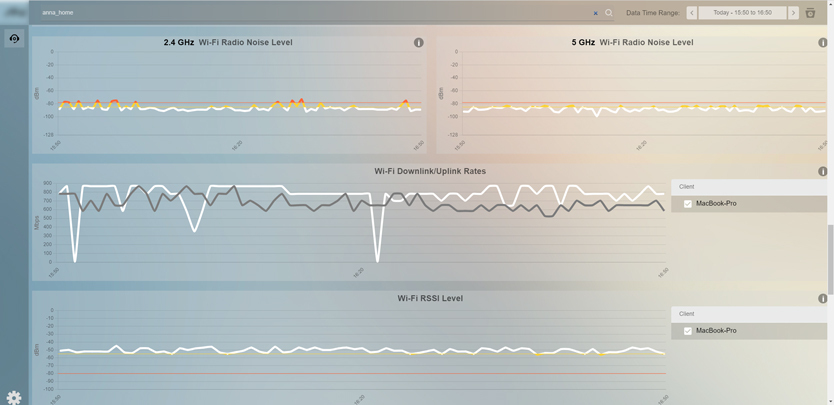

The system we built is a unique software platform for seamless subscriber management and network visibility. It consists of a website, a set of analytics tools and a command-line interface.

We also made the system compliant with telecommunication standards like TR-069, TR-181, TR-369, TR-089, TR-106, TR-131, TR-135, TR-140, TR-142, TR-143, TR-157, TR-196, TR-330, TR-247 and TR-255.

By providing end-to-end visibility of the entire network, the application helps providers go beyond basic connectivity and focus on performance. Identifying issues in real time improves customer satisfaction, retention and quality of experience.

Through common APIs, the application integrates seamlessly with the existing operational support systems (OSS) and business support systems (BSS) used by our client’s customers. The customer-premises equipment (CPE) does not have to be our client’s own device.

The application provides a wealth of service options and customizations for Internet service providers.

Deployment

We deployed Java microservices on a Kubernetes cluster, with React for the GUI. The microservice architecture lets our client handle very high data throughput and scale cost-effectively. It also supports real-time analytics for every payload the system receives.

To let operators check network health, configure alerts and trace issues, we paired Grafana with Prometheus.
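Prometheus collects metrics by scraping HTTP endpoints that expose them in a plain-text exposition format, and Grafana then queries Prometheus to build dashboards. The sketch below renders a gauge in that format by hand, purely to show what a service exposes. The metric name `router_wifi_rssi_dbm` and the `router_id` label are hypothetical, and a Java microservice would more likely use the official Prometheus Java client library than format the text itself.

```python
def _escape_help(text: str) -> str:
    # HELP text must escape backslashes and line feeds.
    return text.replace("\\", "\\\\").replace("\n", "\\n")


def escape_label_value(value: str) -> str:
    # Label values must escape backslashes, double quotes and line feeds.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name: str, help_text: str, samples: list) -> str:
    """Render (labels, value) samples for one gauge in the Prometheus text format."""
    lines = [f"# HELP {name} {_escape_help(help_text)}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        if labels:
            label_str = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


print(render_gauge(
    "router_wifi_rssi_dbm",  # hypothetical metric name
    "Latest Wi-Fi signal strength reported by the router, in dBm.",
    [({"router_id": "A1"}, -52.0), ({"router_id": "B2"}, -71.5)],
), end="")
```

An alert rule in Prometheus (or a Grafana alert) could then fire when this gauge drops below a chosen threshold for a given router.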

Microservices

The system consists of several microservices, each with a specific purpose.

- The Ingestor services convert data models from any router into the TR-181 data model, the industry standard.

- Adapters process the TR-181 model and derive metrics (time series data) from it. Each metric is a data point consisting of a timestamp and a value, such as CPU usage or signal strength at that moment.

- The CPE Control module lets the operator send commands to a device; a command is executed as soon as the corresponding endpoint is called. Commands include device reboot, factory reset and setting passwords for access points.

- With the Firmware Upgrade module, operators can schedule firmware upgrades for any device connected to the platform.

- As part of real-time analytics, statuses show whether a particular router is healthy or not. The operator can also check specific router parameters over the cloud infrastructure.

- Users sometimes call support when their Wi-Fi signal is poor. The Alerts module notifies the operator of such a problem and suggests fixes before the user has to call.

- The Dashboard module lets operators configure any of the more than 100 widgets available in the application to display particular types of incoming data.

- Another useful feature for operators is the Session Manager, which lets them debug routers connected to the cluster.

- Finally, the most advanced module, and the one that took us longest to build, is the ML/AI Cloud. It is central to the product’s further development: our client can use it to train artificial intelligence models that anticipate their customers’ future needs.
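The flow through several of the modules above (ingestion into TR-181, metric extraction, health status and alerts) can be sketched in a few functions. The parameter paths follow TR-181 naming (`Device.DeviceInfo.ProcessStatus.CPUUsage`, `Device.WiFi.AccessPoint.{i}.AssociatedDevice.{i}.SignalStrength`, `Device.DeviceInfo.UpTime`), but the vendor field names, thresholds and suggested fixes are hypothetical. In the real system these stages are Java microservices, not functions in one Python file.

```python
from dataclasses import dataclass

# Hypothetical mapping from one vendor's field names to TR-181 parameter paths.
VENDOR_TO_TR181 = {
    "cpu_pct": "Device.DeviceInfo.ProcessStatus.CPUUsage",
    "wifi_rssi": "Device.WiFi.AccessPoint.1.AssociatedDevice.1.SignalStrength",
    "uptime": "Device.DeviceInfo.UpTime",
}

# Hypothetical alert thresholds.
RSSI_ALERT_DBM = -75
CPU_ALERT_PCT = 90


@dataclass(frozen=True)
class MetricPoint:
    router_id: str
    path: str
    timestamp: float
    value: float


def ingest(raw: dict) -> dict:
    """Ingestor: rename known vendor fields to TR-181 paths and drop unknown ones."""
    return {VENDOR_TO_TR181[k]: v for k, v in raw.items() if k in VENDOR_TO_TR181}


def to_metrics(router_id: str, tr181: dict, timestamp: float) -> list:
    """Adapter: turn each TR-181 parameter into one timestamped metric point."""
    return [MetricPoint(router_id, path, timestamp, value) for path, value in sorted(tr181.items())]


def check(points: list) -> list:
    """Status and alerts: return suggested fixes; an empty list means healthy."""
    alerts = []
    for p in points:
        if p.path.endswith("SignalStrength") and p.value < RSSI_ALERT_DBM:
            alerts.append(f"{p.router_id}: weak Wi-Fi ({p.value} dBm), suggest changing the channel")
        elif p.path.endswith("CPUUsage") and p.value > CPU_ALERT_PCT:
            alerts.append(f"{p.router_id}: CPU at {p.value}%, suggest a reboot")
    return alerts


raw = {"cpu_pct": 35, "wifi_rssi": -81, "uptime": 86400, "fan_rpm": 1200}
points = to_metrics("router-42", ingest(raw), timestamp=1_700_000_000.0)
alerts = check(points)
print("healthy" if not alerts else alerts)
```

Because every router is normalized to TR-181 first, the metric and alert stages need no vendor-specific logic; the unknown `fan_rpm` field is simply dropped at ingestion.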

Storage

We use PostgreSQL to store static values and constants and MongoDB for time series data. The distributed cache runs on Redis, and historical data is stored in AWS S3.
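The source does not say how the Redis cache is used alongside PostgreSQL. One common approach is the cache-aside pattern: read from the cache first, and on a miss read from the database and store the value in the cache with an expiry time. The sketch below assumes that pattern, with a dict standing in for a PostgreSQL table of constants and an in-process `TTLCache` standing in for Redis, which supports per-key expiry natively. The key `max_clients_per_ap` is hypothetical.

```python
import time
from typing import Callable


class TTLCache:
    """In-process stand-in for Redis: each value expires ttl_s seconds after it is set."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.clock = clock
        self._data = {}  # key -> (expires_at, value)

    def get(self, key: str):
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._data[key]  # expired: behave like a miss
            return None
        return value

    def set(self, key: str, value) -> None:
        self._data[key] = (self.clock() + self.ttl_s, value)


class ConfigRepository:
    """Cache-aside reads: try the cache first, fall back to the database on a miss."""

    def __init__(self, db: dict, cache: TTLCache) -> None:
        self.db = db        # stand-in for a PostgreSQL table of constants
        self.cache = cache
        self.db_reads = 0   # how many times the database was queried

    def get(self, key: str):
        value = self.cache.get(key)
        if value is None:  # note: a stored None would also count as a miss
            self.db_reads += 1
            value = self.db[key]
            self.cache.set(key, value)
        return value


now = [0.0]  # fake clock so expiry can be demonstrated without sleeping
repo = ConfigRepository({"max_clients_per_ap": 64}, TTLCache(ttl_s=60, clock=lambda: now[0]))
repo.get("max_clients_per_ap")  # miss: read from the database
repo.get("max_clients_per_ap")  # hit: served from the cache
now[0] = 61.0
repo.get("max_clients_per_ap")  # expired: read from the database again
print(repo.db_reads)  # prints 2
```

Since static values and constants change rarely, a fairly long expiry keeps database load low while still picking up occasional updates.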

Message Broker

The system relies on Apache Kafka in most cases; where Kafka is not sufficient for brokering messages, Apache Pulsar is used instead.
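A common way to spread high-volume device telemetry across a Kafka topic is to key each message by device, so that all messages from one router land on the same partition and keep their relative order, while different routers are processed in parallel. Whether this system keys messages by router is an assumption; the sketch below only illustrates the idea. Kafka’s default partitioner hashes keys with murmur2; the sketch uses CRC32 instead because it ships with Python’s standard library.

```python
import zlib
from collections import defaultdict


def partition_for(router_id: str, num_partitions: int) -> int:
    """Map a router ID to a partition with a stable hash (CRC32 here, not Kafka's murmur2)."""
    return zlib.crc32(router_id.encode("utf-8")) % num_partitions


def assign(messages: list, num_partitions: int) -> dict:
    """Group (router_id, payload) messages by partition, preserving arrival order."""
    partitions = defaultdict(list)
    for router_id, payload in messages:
        partitions[partition_for(router_id, num_partitions)].append((router_id, payload))
    return dict(partitions)


msgs = [("router-1", "boot"), ("router-2", "rssi=-60"), ("router-1", "rssi=-70"), ("router-1", "reboot")]
for partition, batch in sorted(assign(msgs, num_partitions=8).items()):
    print(partition, batch)
```

Because ordering is guaranteed only within a partition, keying by router preserves the sequence of events for each device, which matters when, for example, a reboot command must follow a configuration change.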

Results

It took us about 18 months to develop the fully functional, AI-powered system, which now processes around 1,000,000 payloads per second from 1,000,000 routers. Soon after it went live, operators using it were able to reduce their customer support costs and improve communication with their customers.

The project was also a valuable learning experience: we learned to work with Apache Pulsar, a tool that was new to us. We continue to improve the product, and support for additional industry standards and new routers is next in our pipeline.