Client

Our client is a Canadian service provider that helps organizations in North America unlock the value of sensitive data without compromising personal information. It specializes in de-identifying data and creating better, faster, cleaner datasets for secondary uses such as research and analytics.

Our client’s proven technology gives companies usable data that can be safely linked and put to work in compliance with global regulations, backed by auditable proof.

Project Description

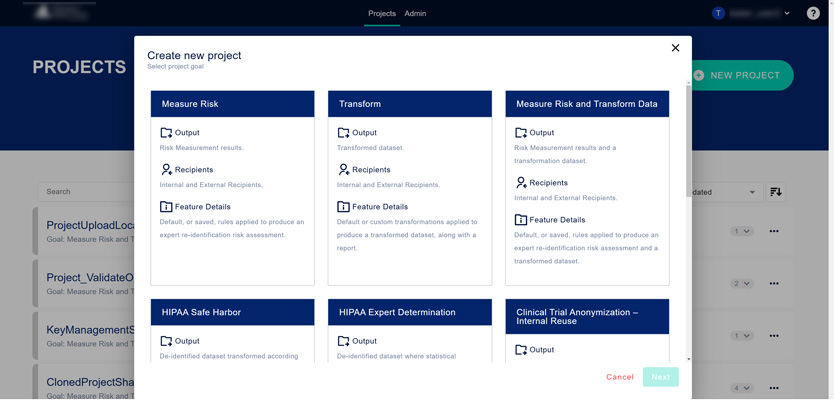

Elinext has delivered healthcare software development services to this customer on two long-term projects. The first is an application that can be installed locally in standalone or cluster mode. It also supports installation on AWS and uses Apache Spark to transform large datasets with billions of records quickly. It has its own dedicated UI.

The second is an application built from several core services (Treatment, Catalog, Configuration, and Orchestration with Airflow) that can be installed only on AWS and also has its own UI.

Our teams provide Automation and Manual QA services for these projects. As QA in healthcare is our specialty, we have been their go-to QA testing company since Q2 2017.

Challenges

The customer initially asked for Automation QA support for their product. Manual QA alone was not enough, and the customer kept running into defects in the application. The goal was to build strong CI/CD and to catch bugs as early as possible.

The Elinext team immediately noticed that the CI/CD processes on the customer’s side were weak.

We provided two to three Automation QA engineers and started using Protractor, the Node.js-based framework recommended by Google for testing Angular applications.

Our initial challenges have not changed much since the start:

- Improvement of the product’s stability

- Minimizing defects found on the customer side

- Establishing clean CI/CD processes

- Testing different environments

Over the course of the work, the following challenges arose unexpectedly, and we had to deal with them:

- Migration from the initial test framework (Protractor), which the customer had originally asked for, due to its deprecation.

- Issues connecting to the environments on AWS.

- Managing accounts in Teams. Some issues remain around the boundary between our scope and the customer’s.

Process

Our team uses common approaches to balance manual and automated testing, guided by a clear picture of how classic CI/CD should work. Some changes were made while implementing the solutions.

Over time, we upgraded the framework version and improved the reports, for example by adding required data about release versions. In some cases, the Elinext team also improved the algorithms used to verify the product.

For testing, we use separate test environments, in some cases dedicated to automated testing, manual testing, or vulnerability testing. Notably, we use only test users and never work with real customer data.

For communication, we rely on daily stand-up meetings, email, chats in Teams, and retrospective and demo sessions. Our experienced engineers and project managers would describe the cooperation as a “rather close” one.

The usual process for testing new features looks like this:

Stage 1: New epics and stories appear on the developers’ side, and developers start implementing the new features. If possible, the Manual QA team starts creating test cases for these stories at the same time; otherwise, it does so later.

Stage 2: All test cases for the new feature are created, and the functionality is available in the product.

Stage 3: Test cases that can be automated are pushed into the ‘queue for automation’ (the Automation team backlog).

Stage 4: Test cases are automated and marked as automated in the Zephyr plugin so that they are not executed manually. They are then added to the appropriate suite and become part of the daily CI process.

Stage 5: The results of the daily CI runs are monitored. If the results are good, reports are provided to the customer. If a run fails, the team investigates and raises defects, updates the test case, or updates the source code.
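Stages 4 and 5 come down to two simple rules: cases marked as automated are skipped during manual execution, and each daily CI run is either reported or investigated. The sketch below illustrates those rules in JavaScript, the language the team writes its tests in. The functions `planRuns` and `triage` and the fields `automated`, `suite`, and `status` are hypothetical and do not reflect Zephyr’s actual data model.

```javascript
// Hypothetical sketch of stages 4 and 5; names and fields are illustrative only.

// Stage 4: cases marked as automated are excluded from manual execution
// and grouped by the CI suite they belong to.
function planRuns(testCases) {
  const manual = [];
  const suites = {};
  for (const tc of testCases) {
    if (!tc.automated) {
      manual.push(tc.id);
      continue;
    }
    if (!suites[tc.suite]) suites[tc.suite] = [];
    suites[tc.suite].push(tc.id);
  }
  return { manual, suites };
}

// Stage 5: a clean daily run is reported to the customer; any failure is
// flagged for investigation (a person then decides whether to raise a
// defect, update the test case, or update the source code).
function triage(results) {
  const failed = results.filter((r) => r.status !== "passed").map((r) => r.id);
  return failed.length === 0
    ? { action: "report", passed: results.length }
    : { action: "investigate", failed };
}
```

Keeping the "skip if automated" decision in one place mirrors the Zephyr flag: once a case moves to a CI suite, it disappears from the manual run list automatically.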

Initially, all activities were handled by a dedicated Automation QA team; later, the team grew to include Manual QA engineers and then some developers.

Solution

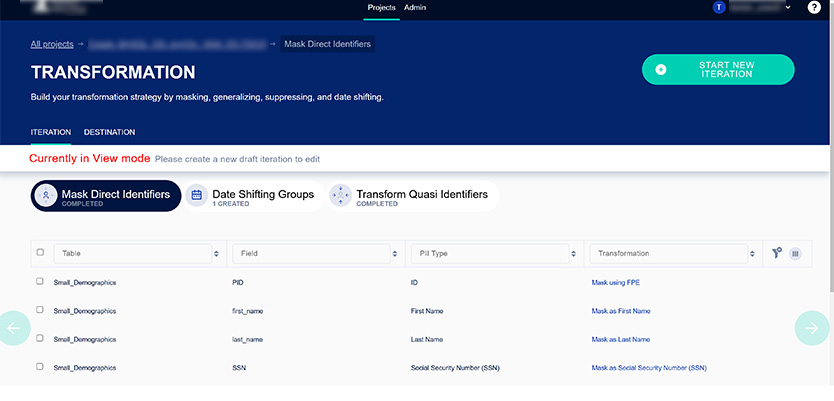

We designed the solution around the project’s functionality and the goals we needed to achieve.

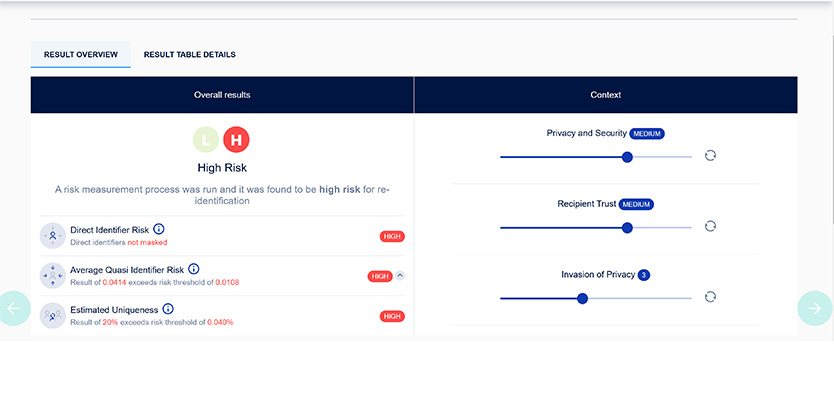

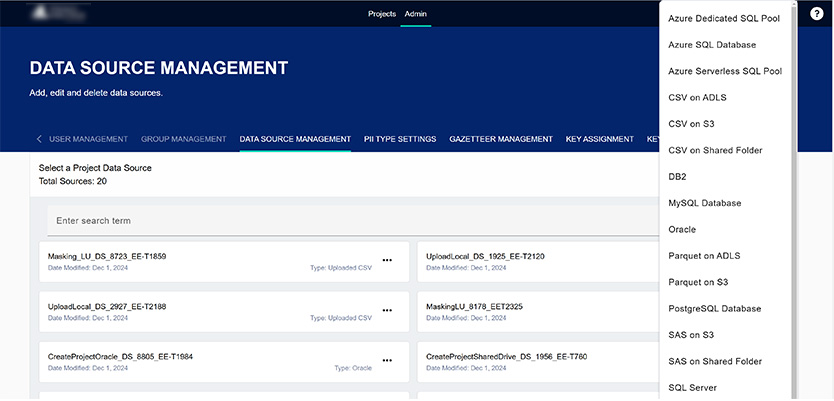

Our initial project was cross-platform and supported many data sources and complex migration scenarios. Verifying PDF reports and CSV data is also an important part of testing the app.
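To illustrate what CSV verification can involve, here is a minimal JavaScript sketch that checks de-identified output for expected columns, an expected row count, and leaked identifiers. The functions `parseCsv` and `verifyDeidentifiedCsv`, the column names, and the `forbiddenValues` list are hypothetical, and the naive parser assumes unquoted, comma-separated fields; this is not the customer’s actual verification logic.

```javascript
// Naive CSV parser (assumption: no quoted fields or embedded commas).
function parseCsv(text) {
  const lines = text.trim().split(/\r?\n/);
  const header = lines[0].split(",");
  const rows = lines.slice(1).map((line) => {
    const cells = line.split(",");
    return Object.fromEntries(header.map((h, i) => [h, cells[i]]));
  });
  return { header, rows };
}

// Returns a list of human-readable problems; an empty list means the file passed.
function verifyDeidentifiedCsv(text, { expectedColumns, expectedRowCount, forbiddenValues }) {
  const { header, rows } = parseCsv(text);
  const problems = [];
  for (const col of expectedColumns) {
    if (!header.includes(col)) problems.push(`missing column: ${col}`);
  }
  if (rows.length !== expectedRowCount) {
    problems.push(`expected ${expectedRowCount} rows, got ${rows.length}`);
  }
  // No original identifier (e.g. a real name) may survive de-identification.
  const forbidden = new Set(forbiddenValues);
  rows.forEach((row, i) => {
    for (const [col, value] of Object.entries(row)) {
      if (forbidden.has(value)) problems.push(`row ${i + 1}, column ${col}: identifier leaked`);
    }
  });
  return problems;
}
```

Returning a list of problems rather than failing on the first one makes a single test run report every issue in the file at once.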

A large server was purchased to keep all environments in one place. For better performance and easier server support, VMware ESXi was installed on it as the virtualization platform. This setup provides several benefits:

- The tested app could be installed on several operating systems, including Unix-like systems (various versions of Red Hat and CentOS) and Windows.

- Support for the app’s cluster installations, with one master and one or more worker machines.

- Support for testing data sources and data origins such as Oracle, MSSQL, Postgres, and DB2.

- Virtualization reduced migration-testing effort, since previous versions could simply be kept as snapshots.

- The Jenkins instance that runs the automation jobs is also one of the machines on the server.
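One way to picture the coverage this server provides is as a matrix of operating systems, data sources, and installation topologies. The following JavaScript sketch generates such a matrix; the OS and database labels follow the list above, while the worker counts, the `buildMatrix` function, and the snapshot naming scheme are illustrative assumptions rather than the actual inventory.

```javascript
// Hypothetical labels; real environments would pin concrete OS and DB versions.
const osImages = ["rhel", "centos", "windows"];
const dataSources = ["oracle", "mssql", "postgres", "db2"];
const topologies = [
  { mode: "standalone", workers: 0 },
  { mode: "cluster", workers: 1 }, // smallest cluster: 1 master + 1 worker
  { mode: "cluster", workers: 3 },
];

// Cartesian product of OS x data source x topology.
function buildMatrix(oses, sources, topos) {
  const envs = [];
  for (const os of oses) {
    for (const source of sources) {
      for (const topo of topos) {
        const suffix = topo.mode === "cluster" ? `-${topo.workers}w` : "";
        envs.push({
          name: `${os}-${source}-${topo.mode}${suffix}`,
          os,
          source,
          // Master (or the single standalone node) plus any workers.
          machines: 1 + topo.workers,
          // VM snapshot of the previous release, restored for migration tests.
          snapshot: `${os}-${topo.mode}-previous-release`,
        });
      }
    }
  }
  return envs;
}
```

Even this small example yields 36 combinations, which shows why keeping them all as VMs and snapshots on one host is more practical than provisioning separate physical machines.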

As noted above, the customer initially asked us to use Protractor as the automation framework. At the time, it was easy to understand and quick to start with, and it offered all the required integrations, such as reporting systems.

Unfortunately, after two to three years Google decided to stop supporting the framework, and it was later deprecated.

As a result, before the deprecation took effect, we had to evaluate the best automation frameworks and estimate the effort needed to migrate from Protractor. We chose WebdriverIO as the new automation framework.

We can highlight the following benefits of the WebdriverIO:

- JavaScript-based, which makes it easy to migrate from Protractor and to maintain

- A well-established, stable framework, unlike Protractor, which was modern when it appeared but was abandoned after about five years

- Supports all the key features we need when testing PA products, such as parallel execution in several browsers and integration with the Jasmine test framework and reporting systems.
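A WebdriverIO configuration for parallel runs in several browsers with the Jasmine framework might look roughly like the sketch below. It assumes a CommonJS `wdio.conf.js` (newer WebdriverIO releases use an ESM `export const config` instead); the spec path, instance count, and timeout are placeholder values, and the `spec` reporter requires the `@wdio/spec-reporter` package.

```javascript
// wdio.conf.js: sketch only; paths and numbers are placeholders.
const config = {
  runner: "local",
  specs: ["./test/specs/**/*.e2e.js"],
  // Upper bound on parallel workers across all capabilities.
  maxInstances: 4,
  // Each capability is a separate browser; every spec runs against each one.
  capabilities: [{ browserName: "chrome" }, { browserName: "firefox" }],
  logLevel: "warn",
  framework: "jasmine",
  jasmineOpts: {
    // Per-spec timeout in milliseconds.
    defaultTimeoutInterval: 60000,
  },
  // Console reporter; needs @wdio/spec-reporter installed.
  reporters: ["spec"],
};

exports.config = config;
```

Adding a browser is then a one-line change to `capabilities`, and `maxInstances` caps how many sessions the CI machine runs at once.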

For automation purposes, we deployed a machine with Jenkins installed and created jobs that run daily, such as:

- Deploying a fresh version of the app, with or without clearing its MongoDB database

- Running the smoke test suite and producing a detailed report

- Running the regression test suite and producing a detailed report

- Utility jobs for updating Docker containers (the automation tests run in Docker), posting notifications about successful or failed suite runs to a dedicated Slack channel, and even a job that runs the automation suite together with ZAP and collects the ZAP reports.
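The Slack notification job can be reduced to building a short message from the suite results and posting it to an incoming webhook. The sketch below assumes a Slack incoming webhook whose URL is stored in a hypothetical `SLACK_WEBHOOK_URL` environment variable, and Node.js 18 or later for the global `fetch`; the summary fields and function names are illustrative.

```javascript
// Builds the JSON body for a Slack incoming webhook ("text" is the message).
function buildSlackPayload({ suite, passed, failed, buildUrl }) {
  const ok = failed === 0;
  const icon = ok ? ":white_check_mark:" : ":x:";
  const status = ok ? "succeeded" : "FAILED";
  return {
    text: `${icon} ${suite} suite ${status}: ${passed} passed, ${failed} failed. ${buildUrl}`,
  };
}

// Posts the summary; SLACK_WEBHOOK_URL is a hypothetical variable name.
async function notifySlack(summary, webhookUrl = process.env.SLACK_WEBHOOK_URL) {
  if (!webhookUrl) throw new Error("SLACK_WEBHOOK_URL is not set");
  // Global fetch is available in Node.js 18 and later.
  const res = await fetch(webhookUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildSlackPayload(summary)),
  });
  if (!res.ok) throw new Error(`Slack webhook returned ${res.status}`);
}
```

Separating payload construction from the HTTP call keeps the message format testable without network access; a Jenkins post-build step would only need to call `notifySlack` with the suite totals.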

—

The second project’s functionality differs somewhat. This relatively young project is about a year old. It can be installed only on AWS, so Elinext engineers may need different approaches to test it. For this project, we keep the Jenkins environment in a DMZ (demilitarized zone) network on AWS.

Aside from testing these two major projects, we also helped with several side projects run by our customer, but that work has since ended for various reasons.

—

The most common types of testing performed by our team:

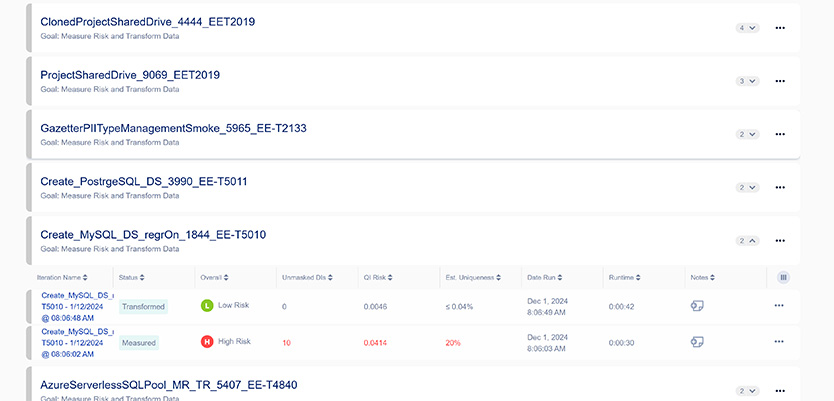

- Functional testing: running test cycles with new test cases against new functionality. Regression testing before a release ships is also part of this type, as are migration test cases that cover updating from previous versions to the current one, and UI and API test cases. This area is mostly the responsibility of the Manual QA team. The Elinext team uses Zephyr (a Jira plugin) to store test cases.

- Automation testing: every test case from the previous type that can be automated becomes part of this type. As a result, the daily CI automation runs include end-to-end tests, API tests, and installation scripts. The Elinext team uses Jasmine for API tests and WebdriverIO for UI (end-to-end) tests, and writes all automated tests in JavaScript.

- Vulnerability testing: penetration testing with the OWASP ZAP tool.

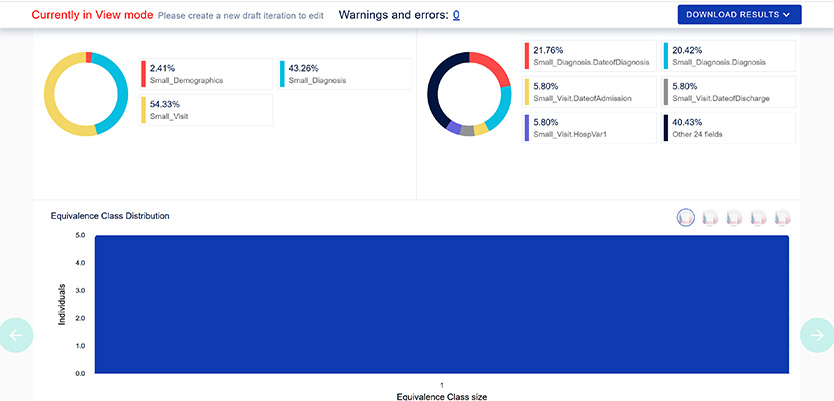

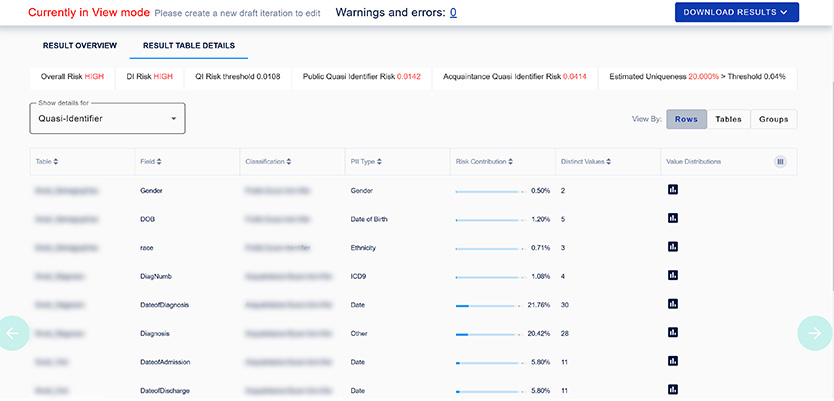

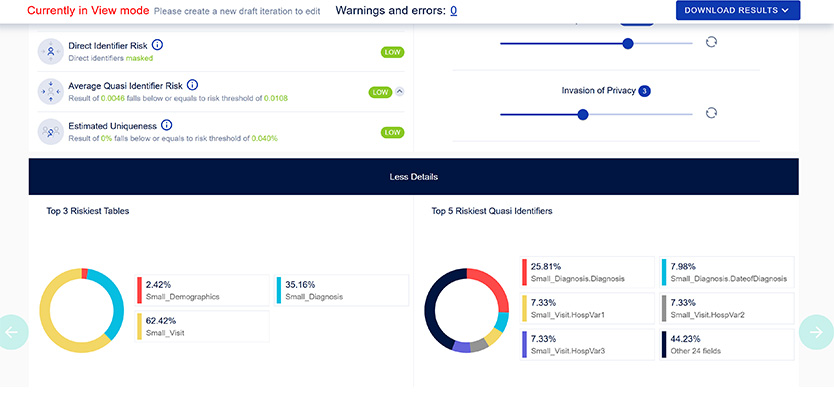

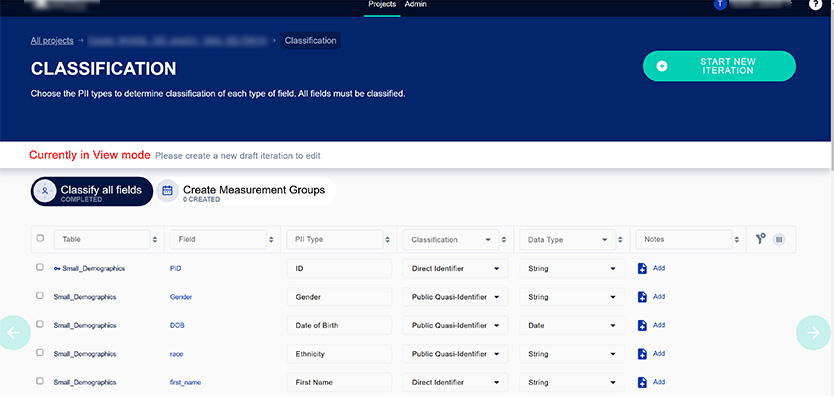

As mentioned, our major project enables organizations to share sensitive data without fear of it being compromised. The app can be run from the user interface or programmatically through its REST API.

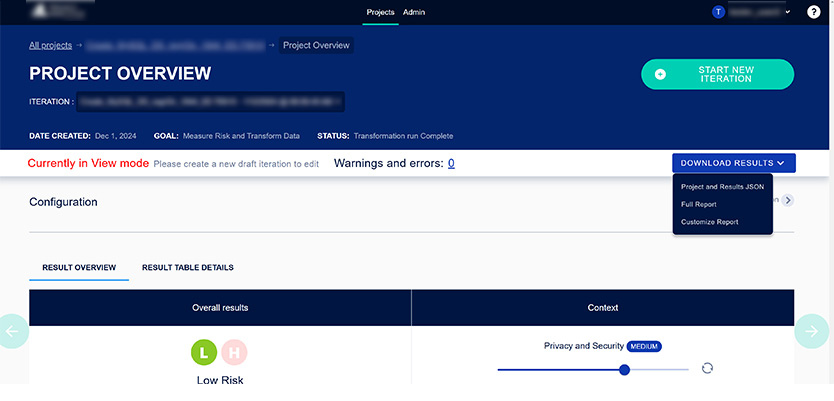

Results

Automated CI has helped a great deal since we began delivering automated software testing services: critical and catastrophic defects were discovered before the official releases. Since the Manual QA team joined the cooperation, the quality of the written test cases and the stability of the end product have risen to the level we consider excellent for QA in healthcare, and they have remained there ever since.

The customer’s satisfaction with the Elinext team is best seen in the fact that the team has grown from 3 people to more than 15 across multiple projects. We can confirm that the customer likes our approaches to AQA, QA, and development.

As for communication, we need to balance the customer’s requirements against the scope we may want to test. Everything must be discussed with and approved by the customer.

Our oldest project with the customer is now mostly in support mode. Releases of this healthcare data analytics solution have become rare, dropping from four per year to one or two.

The younger, active project has several releases per year (about three to four). We are open to expanding our cooperation, as the customer is interested in our data analytics services.