In the rapidly evolving world of artificial intelligence, the ability to teach machines with minimal data is becoming increasingly important. This is where few-shot and zero-shot learning come into play.

These techniques allow AI models to recognize new objects or perform new tasks with little to no prior training data. Let’s explore how these methods work, their significance, and how they are shaping the future of AI.

What is Few-Shot Learning?

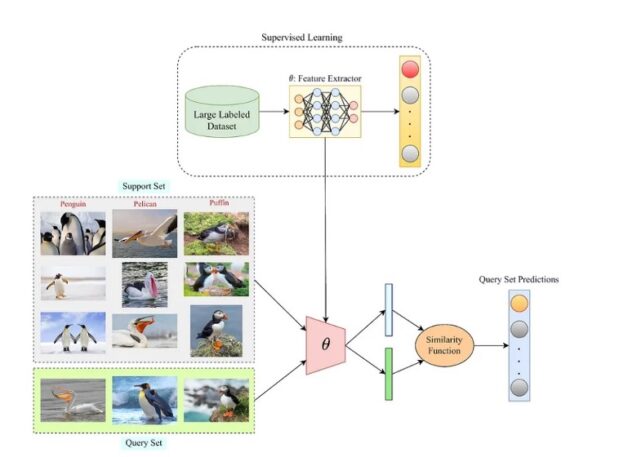

Few-shot learning (FSL) is a powerful machine learning paradigm that aims to build models that can generalize from a small amount of labeled data. This approach is particularly valuable when large annotated datasets are not available, which is common in many real-world applications like medical imaging, wildlife monitoring, and personalized AI.

Traditional machine learning models typically require vast amounts of labeled data to perform well. However, in many real-world scenarios, collecting such large datasets is impractical or even impossible.

Few-shot learning aims to overcome this limitation by leveraging prior knowledge from related tasks to generalize to new tasks with minimal data. For example, imagine training an AI model to recognize different species of birds. Instead of requiring thousands of labeled images for each species, a few-shot learning model might only need five or ten images of each bird to make accurate predictions.

Source: Paperspace

Few-shot methods are usually grouped by how many labeled examples per class are available:

- One-shot learning: The model is trained to recognize patterns with only one example per class.

- Few-shot learning: The model is trained with a small number of examples per class (e.g., 5-10).

- Zero-shot learning: The model generalizes to new classes without having seen any labeled examples of them, typically by relying on auxiliary information such as attributes or text descriptions of the classes.
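The one-shot and few-shot settings above differ only in the number of labeled examples per class, often written K, with N classes per task. A minimal sketch of how such an N-way K-shot task (an "episode") might be sampled is shown here; the function name `sample_episode`, its parameters, and the toy file names are illustrative assumptions, not part of any library.

```python
import random
from collections import defaultdict

def sample_episode(labeled_items, n_way, k_shot, n_query, seed=None):
    """Sample one N-way K-shot episode from (item, label) pairs.

    Returns (support, query): lists of (item, label) pairs holding k_shot
    support items and n_query query items for each of n_way classes.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for item, label in labeled_items:
        by_class[label].append(item)

    # Only classes with enough examples can take part in the episode.
    eligible = [c for c, items in by_class.items() if len(items) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query examples")

    support, query = [], []
    for label in rng.sample(eligible, n_way):
        picked = rng.sample(by_class[label], k_shot + n_query)
        support += [(item, label) for item in picked[:k_shot]]
        query += [(item, label) for item in picked[k_shot:]]
    return support, query

# Toy data: 4 bird species with 6 hypothetical image file names each.
data = [(f"{species}_{i}.jpg", species)
        for species in ["robin", "wren", "finch", "heron"]
        for i in range(6)]

# 3-way 1-shot episode with 2 query images per class.
support, query = sample_episode(data, n_way=3, k_shot=1, n_query=2, seed=0)
```

Setting `k_shot=1` gives the one-shot case and `k_shot=5` a typical few-shot case. The zero-shot case does not fit this sampler, since it has no support examples and needs class descriptions instead.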

Why Few-Shot Learning?

Few-shot learning is essential for scenarios where data is scarce, expensive, or time-consuming to collect. Some facts that underscore the importance of FSL include:

- Data Scarcity: In many domains, especially in medicine, labeling data requires expert knowledge. For instance, in radiology, annotating medical images can take hours or days of a radiologist’s time.

- Efficiency: Few-shot learning models can drastically reduce the time and resources needed to deploy a machine learning system, making AI accessible for small companies or research groups with limited data.

- Real-World Applications: FSL is used in applications like rare species recognition in wildlife, where only a few images of a species might exist, or in personalized AI, where models need to adapt to individual users based on limited interaction data.

Key Techniques in Few-Shot Learning

- Meta-Learning: Also known as “learning to learn,” meta-learning involves training a model on a variety of tasks so that it can adapt quickly to new tasks with few examples.

- Prototypical Networks: In this approach, a model learns to create a prototype (or average embedding) for each class based on the few available examples. When presented with a new instance, the model compares it to the prototypes and classifies it based on the closest match.

- Siamese Networks: Siamese networks are used to compare pairs of inputs and determine if they belong to the same class. By training on pairs of examples, these networks can learn to distinguish between classes with minimal data.
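The prototype idea from the list above can be illustrated without any deep learning framework. The sketch below assumes the embeddings are already computed and uses made-up 2-D vectors; the helper names `class_prototypes` and `classify` are hypothetical.

```python
import math

def class_prototypes(support):
    """Average the embeddings of each class. support: list of (vector, label)."""
    sums, counts = {}, {}
    for vec, label in support:
        if label not in sums:
            sums[label] = [0.0] * len(vec)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total]
            for label, total in sums.items()}

def classify(query_vec, prototypes):
    """Return the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(query_vec, prototypes[label]))

# Hypothetical 2-D embeddings, two support examples per class.
support = [([0.0, 0.0], "sparrow"), ([0.2, 0.0], "sparrow"),
           ([1.0, 1.0], "eagle"), ([1.0, 0.8], "eagle")]

prototypes = class_prototypes(support)
prediction = classify([0.9, 0.7], prototypes)
```

In a real system the vectors would come from a trained encoder rather than being written by hand; only the averaging and nearest-prototype steps are shown here.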

Implementing Few-Shot Learning

To implement few-shot learning, we typically use meta-learning algorithms like Matching Networks, Prototypical Networks, or Model-Agnostic Meta-Learning (MAML). Below is a Python example using Prototypical Networks with PyTorch.

Example: Prototypical Networks in PyTorch

Step 1: Import Required Libraries

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.utils.data import DataLoader
    from torchvision import transforms, datasets
    from torchvision.models import resnet18
    import numpy as np

Step 2: Define the Prototypical Network Model

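    class PrototypicalNetwork(nn.Module):
        """ResNet-18 encoder that maps images to embedding vectors."""

        def __init__(self, embedding_dim):
            super().__init__()
            # ImageNet-pretrained backbone (torchvision >= 0.13 uses `weights`
            # in place of the deprecated `pretrained=True`)
            self.encoder = resnet18(weights="IMAGENET1K_V1")
            # Replace the classification head with a projection to embedding_dim
            self.encoder.fc = nn.Linear(self.encoder.fc.in_features, embedding_dim)

        def forward(self, x):
            return self.encoder(x)


    def prototypical_loss(prototypes, embeddings, labels, n_classes):
        # n_classes is kept for call compatibility; the number of classes is
        # implied by the number of prototypes
        # Pairwise Euclidean distances, shape (n_examples, n_prototypes)
        distances = torch.cdist(embeddings, prototypes)
        # A closer prototype must score higher, so the logits are the negated
        # distances; cross_entropy takes integer class indices as targets
        return nn.functional.cross_entropy(-distances, labels)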
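To make the loss concrete: Prototypical Networks turn distances into class probabilities with a softmax over the negated distances, so the nearest prototype gets the highest probability. The following is a small pure-Python sketch of that arithmetic for a single query example; the distance values and function names are assumptions chosen for illustration.

```python
import math

def class_probabilities(distances):
    """Softmax over negative distances: closer prototypes get higher probability."""
    logits = [-d for d in distances]
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def episode_loss(distances, true_index):
    """Negative log-probability of the true class for one query example."""
    return -math.log(class_probabilities(distances)[true_index])

# Hypothetical distances from one query embedding to three prototypes.
distances = [0.5, 2.0, 3.0]
probs = class_probabilities(distances)

loss_if_nearest_is_correct = episode_loss(distances, true_index=0)
loss_if_farthest_is_correct = episode_loss(distances, true_index=2)
```

The loss is small when the true class has the nearest prototype and grows as the true prototype moves farther away, which is the signal that pulls same-class embeddings together during training.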

Step 3: Data Preparation

    transform = transforms.Compose([
        transforms.Resize((128, 128)),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
    ])

    train_dataset = datasets.ImageFolder(root='path_to_your_data', transform=transform)
    train_loader = DataLoader(train_dataset, batch_size=32, shuffle=True)

    n_classes = len(train_dataset.classes)
    embedding_dim = 128

Step 4: Training the Model

    model = PrototypicalNetwork(embedding_dim)
    optimizer = optim.Adam(model.parameters(), lr=0.001)
    n_epochs = 10

    for epoch in range(n_epochs):
        model.train()
        for i, (images, labels) in enumerate(train_loader):
            optimizer.zero_grad()
            embeddings = model(images)

            # Build prototypes only for classes present in this batch (the mean
            # over an absent class would be NaN) and remap labels to 0..C-1
            batch_classes, targets = torch.unique(labels, return_inverse=True)
            prototypes = torch.stack([
                embeddings[targets == k].mean(dim=0)
                for k in range(len(batch_classes))
            ])

            # For simplicity every example serves as both support and query;
            # episodic training would split each batch into the two sets
            loss = prototypical_loss(prototypes, embeddings, targets, len(batch_classes))
            loss.backward()
            optimizer.step()

        # Loss of the last batch in the epoch
        print(f'Epoch {epoch+1}/{n_epochs}, Loss: {loss.item()}')

Step 5: Evaluation

Evaluation in few-shot learning often involves using N-way K-shot tasks, where the model must correctly classify new examples given a few labeled instances. You can evaluate the model by creating such tasks and measuring accuracy.

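    # Example evaluation with 5-way 1-shot classification
    def evaluate(model, test_loader, n_classes, n_way=5, n_shot=1):
        model.eval()
        correct = 0
        total = 0
        with torch.no_grad():
            for images, labels in test_loader:
                embeddings = model(images)
                # Build the episode from up to n_way classes present in this batch
                episode_classes = labels.unique()[:n_way]
                prototypes = []
                query_mask = torch.zeros_like(labels, dtype=torch.bool)
                targets = torch.zeros_like(labels)
                for way_idx, class_idx in enumerate(episode_classes):
                    idx = (labels == class_idx).nonzero(as_tuple=True)[0]
                    # First n_shot examples form the support set; the rest are queries
                    prototypes.append(embeddings[idx[:n_shot]].mean(dim=0))
                    query_mask[idx[n_shot:]] = True
                    targets[idx[n_shot:]] = way_idx
                if not query_mask.any():
                    continue  # no query examples left in this batch
                prototypes = torch.stack(prototypes)
                distances = torch.cdist(embeddings[query_mask], prototypes)
                predictions = distances.argmin(dim=1)
                correct += (predictions == targets[query_mask]).sum().item()
                total += int(query_mask.sum())
        accuracy = correct / total if total else 0.0
        print(f'Accuracy: {accuracy * 100:.2f}%')
        return accuracy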
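Because each N-way K-shot task is small, accuracy on a single task is noisy, so few-shot results are commonly reported as a mean over many tasks together with a confidence interval. Here is a minimal sketch of that summary, assuming a normal approximation and a list of per-task accuracies; the function name `summarize_accuracy` and the numbers are illustrative only.

```python
import math
import statistics

def summarize_accuracy(episode_accuracies):
    """Return (mean, half_width) of a normal-approximation 95% confidence interval."""
    n = len(episode_accuracies)
    mean = statistics.fmean(episode_accuracies)
    # Sample standard deviation; needs at least two episodes.
    std = statistics.stdev(episode_accuracies)
    half_width = 1.96 * std / math.sqrt(n)
    return mean, half_width

# Hypothetical per-episode accuracies from a handful of 5-way tasks.
accuracies = [0.4, 0.6, 0.6, 0.4, 0.5, 0.5]
mean, half_width = summarize_accuracy(accuracies)
print(f"Accuracy: {mean * 100:.2f}% +/- {half_width * 100:.2f}%")
```

With only a few episodes the interval is wide; averaging over hundreds of sampled tasks narrows it considerably.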

Few-Shot Learning in Action

One remarkable application of FSL is in facial recognition for security systems. Traditional facial recognition systems require large datasets of labeled faces, which can be impractical for individual users or small organizations. With FSL, a model can be trained with just a few images of a person’s face and still achieve high accuracy in recognizing the person under various conditions.

A study by Snell et al. (2017) showed that Prototypical Networks achieved 49.42% accuracy on the mini-ImageNet dataset in the 5-way 1-shot setting, where chance accuracy is 20%, demonstrating the effectiveness of FSL in challenging settings.

The concept of FSL has been widely adopted in industry, with companies like Google and Facebook developing models that leverage FSL for improving personalized user experiences.

Challenges and Future Directions

Despite its promise, few-shot learning comes with challenges. These include the difficulty of ensuring that models generalize well to entirely new tasks and the computational cost of some meta-learning algorithms. Moreover, for zero-shot learning, developing robust semantic representations that accurately capture the characteristics of unseen classes is an ongoing challenge.

Looking ahead, research in areas like self-supervised learning, transfer learning, and the integration of multimodal data (e.g., combining text and images) is likely to further advance the capabilities of few-shot and zero-shot learning. As these techniques continue to mature, they will play a critical role in making AI more adaptable, efficient, and accessible across various industries.

Conclusion

Few-shot learning is transforming the way we approach machine learning in data-scarce environments. By using models like Prototypical Networks, we can create systems that learn effectively from minimal data, unlocking new possibilities in fields where data collection is challenging. As research and technology continue to advance, we can expect FSL to play a critical role in the broader AI landscape.